All Flags Such As Porn And Upsetting-offensive Are Query-independent

Onlines

Mar 17, 2025 · 5 min read

All Flags Such as Porn and Upsetting-Offensive are Query-Independent: A Deep Dive into Content Filtering and Contextual Understanding

Alongside its useful information, the internet carries a large volume of explicit, offensive, and harmful content. Filtering that content effectively while preserving freedom of expression remains a hard, unsolved problem. This article examines what it means for pornography and upsetting/offensive flags to be query-independent, and what that implies for content filtering systems, ethics, and the future of online safety. We'll look at why attaching a "porn" or "offensive" label by keyword alone isn't sufficient, and why understanding the content in context is vital to accurate and responsible moderation.

What Does "Query-Independent" Mean in This Context?

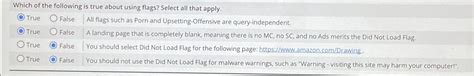

In content moderation, a flag is "query-independent" when it is determined by the characteristics of the content itself, irrespective of the user's search query or intent. A pornography flag, for example, should be triggered by the explicit nature of the content, not by the user's search terms. Whether someone searches for "kitten videos" or "explicit adult content," a truly query-independent system assigns the same flag to the same pornographic image. This is distinct from query-dependent systems, which might flag content only when it matches specific keywords in the user's search. Query independence matters because it keeps flagging consistent and makes filtering harder to circumvent by rewording the query.
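The distinction can be made concrete with a minimal sketch. Everything here is hypothetical and for illustration only: the `Document` type, the `content_flags` and `annotate_result` functions, and the tiny `EXPLICIT_TERMS` word list, which merely stands in for a real content classifier. The point is structural: the flagging function never receives the query, so two different queries cannot produce different flags for the same document.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    url: str
    text: str


# Hypothetical stand-in for a real content classifier (illustration only).
EXPLICIT_TERMS = frozenset({"explicit", "xxx"})


def content_flags(doc: Document) -> frozenset:
    """Compute flags from the document alone; no query is passed in."""
    words = set(doc.text.lower().split())
    flags = set()
    if words & EXPLICIT_TERMS:
        flags.add("porn")
    return frozenset(flags)


def annotate_result(query: str, doc: Document) -> dict:
    """Attach flags to a search result.

    The query is recorded for the result, but it is never consulted
    when the flags are computed.
    """
    return {"query": query, "url": doc.url, "flags": content_flags(doc)}


doc = Document(url="https://example.com/a", text="Explicit xxx gallery")
r1 = annotate_result("kitten videos", doc)
r2 = annotate_result("explicit adult content", doc)
print(r1["flags"] == r2["flags"])  # same document, same flags
```

Whether a flagged result is then shown for a given query is a separate decision that can still depend on the query; only the flag itself is fixed by the content.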

The Limitations of Keyword-Based Filtering

Traditional keyword-based filtering systems are notoriously inadequate. They rely on a simple matching of words or phrases with a pre-defined list of flagged terms. This approach suffers from several critical flaws:

- Easily Circumvented: Offenders can evade these systems with synonyms, misspellings, or coded language. Replacing "pornography" with "adult entertainment" or using phonetic spellings bypasses simple keyword filters.

- Contextual Blindness: Keyword-based systems lack contextual understanding. A word like "breast" can be innocuous in a medical article and offensive in a sexually explicit caption. Because the system cannot tell the two apart, it produces both false positives (flagging benign content) and false negatives (missing harmful content).

- Scalability Issues: Maintaining and updating extensive keyword lists is a monumental task. New words, slang, and coded language appear constantly, so the lists need continual monitoring and revision to keep pace with evolving online trends.
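The first two failure modes are easy to reproduce. The sketch below is a deliberately naive filter with a hypothetical two-word `BLOCKLIST`; it is not any real product's implementation, only a demonstration of how exact word matching misses evasions and flags benign text.

```python
# Hypothetical blocklist, for illustration only.
BLOCKLIST = {"pornography", "breast"}


def keyword_flag(text: str) -> bool:
    """Flag text if any whitespace-separated word is on the blocklist."""
    words = {w.strip(".,!?\"'").lower() for w in text.split()}
    return bool(words & BLOCKLIST)


samples = [
    "Free pornography here",                       # caught
    "Free p0rnography here",                       # misspelling evades the list
    "Adult entertainment, no signup",              # synonym evades the list
    "Early breast cancer screening saves lives.",  # benign, but flagged
]

for text in samples:
    print(keyword_flag(text), "|", text)
```

The second and third samples are false negatives and the fourth is a false positive, which is the pattern described in the list above.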

The Importance of Content Analysis Techniques

To achieve true query independence, sophisticated content analysis techniques are necessary. These techniques go beyond simple keyword matching and delve into the semantic meaning and visual characteristics of the content:

- Image Recognition: Advanced algorithms can identify explicit images based on visual patterns and features. These algorithms learn from massive datasets of labeled images, improving their accuracy over time.

- Natural Language Processing (NLP): NLP techniques analyze text to identify potentially offensive language, hate speech, and other harmful content. These methods can consider the context of words and phrases, improving the accuracy of flagging.

- Machine Learning (ML): ML models can be trained on vast datasets of flagged and unflagged content to learn complex patterns and relationships. This enables the system to adapt to new and evolving forms of offensive material.
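To show what "trained on flagged and unflagged content" can look like in the smallest possible form, here is a sketch of a multinomial naive Bayes text classifier with add-one smoothing, written with the standard library only. The article does not name a specific model, so the choice of naive Bayes is an assumption, and the five training examples and the `flagged`/`ok` labels are made up for illustration. Production systems use far larger datasets and models.

```python
import math
from collections import Counter


def tokenize(text: str) -> list:
    return text.lower().split()


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = {}        # label -> Counter of token counts
        self.doc_counts = Counter()  # label -> number of training documents
        self.vocab = set()

    def fit(self, examples):
        for text, label in examples:
            self.doc_counts[label] += 1
            counts = self.word_counts.setdefault(label, Counter())
            for tok in tokenize(text):
                counts[tok] += 1
                self.vocab.add(tok)

    def predict(self, text: str) -> str:
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            # Log prior plus smoothed log likelihood of each token.
            score = math.log(self.doc_counts[label] / total_docs)
            denom = sum(counts.values()) + len(self.vocab)
            for tok in tokenize(text):
                score += math.log((counts[tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Toy, made-up training data (illustration only).
training = [
    ("explicit adult video", "flagged"),
    ("explicit nude photos", "flagged"),
    ("breast cancer screening guide", "ok"),
    ("kitten video compilation", "ok"),
    ("medical guide to breast health", "ok"),
]

model = NaiveBayes()
model.fit(training)
print(model.predict("breast cancer guide"))
print(model.predict("explicit photos"))
```

Unlike the keyword approach, the model weighs every word in the text against what it saw in training, so "breast" next to "cancer" and "guide" is scored differently from "explicit" next to "photos". It still sees only the content, never a search query.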

Ethical Considerations and the Nuances of "Offensive" Content

The definition of "offensive" is inherently subjective and culturally dependent. What one person finds offensive, another might find harmless or even humorous. This subjectivity presents significant challenges for content moderation systems. A query-independent system must carefully consider these ethical implications:

- Balancing Freedom of Expression with Safety: The goal is not to censor legitimate speech but to prevent the spread of genuinely harmful and dangerous content. The line between offensive and protected speech can be blurry, requiring careful consideration and potentially human oversight.

- Cultural Sensitivity: Content moderation systems should be sensitive to different cultural norms and values. What is considered offensive in one culture might be acceptable in another. A truly effective system needs to adapt to this cultural diversity.

- Transparency and Accountability: Content moderation processes should be transparent and accountable. Users should have a clear understanding of the criteria used for flagging content and the process for appealing decisions.

The Role of Human Moderation in a Query-Independent System

While automated systems can significantly enhance the efficiency of content moderation, human oversight remains crucial. Complex cases requiring nuanced judgment, such as satirical content that might be misconstrued as offensive, demand human intervention. A hybrid approach, combining automated content analysis with human review, offers the most robust and ethically responsible solution.
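One common way to structure such a hybrid pipeline is to act automatically only on confident scores and send everything in between to a person. The sketch below assumes a classifier that outputs a score between 0 and 1; the `route` function, the `Action` names, and both threshold values are hypothetical and would need tuning against real data.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    AUTO_FLAG = "auto_flag"


# Hypothetical thresholds, chosen only for illustration.
AUTO_FLAG_THRESHOLD = 0.95
ALLOW_THRESHOLD = 0.20


def route(score: float) -> Action:
    """Map a classifier score in [0, 1] to a moderation action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= AUTO_FLAG_THRESHOLD:
        return Action.AUTO_FLAG
    if score <= ALLOW_THRESHOLD:
        return Action.ALLOW
    # Uncertain cases, such as satire, go to a human moderator.
    return Action.HUMAN_REVIEW


for s in (0.05, 0.60, 0.99):
    print(s, route(s).value)
```

Widening the gap between the two thresholds sends more items to reviewers and fewer to automatic decisions, which trades moderator workload for fewer automated mistakes.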

Future Directions in Query-Independent Content Filtering

The field of query-independent content filtering is constantly evolving. Future developments are likely to involve:

- Improved AI and ML models: Continued advancements in artificial intelligence and machine learning will lead to more accurate and efficient content analysis techniques.

- Contextual awareness: Future systems will place a greater emphasis on contextual understanding, ensuring that content is assessed within its proper context.

- User feedback mechanisms: Incorporating user feedback into the moderation process can help refine algorithms and improve accuracy over time.

- Collaborative filtering approaches: Leveraging the collective wisdom of a community to identify and flag harmful content can enhance the effectiveness of moderation efforts.

Conclusion: Towards a Safer and More Responsible Online Environment

The pursuit of query-independent content filtering is essential for creating a safer and more responsible online environment. While challenges remain, advancements in content analysis techniques, coupled with ethical considerations and human oversight, offer a promising path towards achieving this goal. Moving beyond simple keyword-based systems and embracing the power of AI and ML, while always prioritizing transparency and accountability, is vital for navigating the complex landscape of online content moderation and protecting users from harmful and offensive materials. The ultimate aim is a system that flags content based on its inherent characteristics, not the user's intentions, ensuring a consistent and effective approach to safeguarding online communities.
