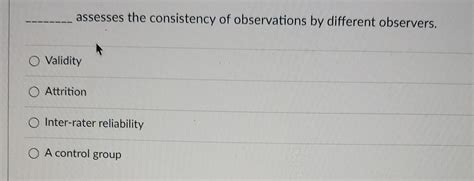

________ Assesses The Consistency Of Observations By Different Observers.

Onlines

Mar 10, 2025


Inter-rater Reliability: Assessing the Consistency of Observations by Different Observers

Inter-rater reliability, also known as inter-observer reliability, is a crucial concept in research and in any field that requires objective assessment. It measures the degree of agreement among raters who independently judge or observe the same phenomenon. This consistency matters whenever several people collect data, particularly in qualitative research, clinical settings, and observational studies. High inter-rater reliability indicates that the observations are not driven by the individual biases or interpretations of particular observers, which supports the validity and trustworthiness of the findings. This article examines why inter-rater reliability matters, the main methods for calculating it, the factors that affect it, and strategies for improving it.

The Significance of Inter-rater Reliability

Inter-rater reliability matters across many disciplines because it underpins the robustness and generalizability of research findings. Here's why it's so critical:

- Minimizing Bias: Human judgment is inherently subjective, and different observers may interpret the same event or behavior differently, leading to inconsistencies in the data. High inter-rater reliability indicates that these individual differences are small, so the data depend less on who happened to do the observing. It cannot, however, detect biases that all raters share.

- Supporting Validity: Consistent agreement among observers is a precondition for valid measurement, because a tool that gives different results depending on the rater cannot accurately capture the phenomenon it is meant to measure. Agreement alone does not prove validity, though: raters can agree consistently and still all be wrong.

- Improving Reliability of Results: High inter-rater reliability increases confidence in the overall research findings. It suggests that the results are less likely to reflect chance or individual observer error and more likely to reflect the phenomenon under investigation.

- Facilitating Replicability: Studies with high inter-rater reliability are easier to replicate, because other researchers using the same methods can expect to obtain similar results. This strengthens the generalizability and credibility of the findings.

- Ensuring Consistency in Clinical Settings: In healthcare, consistent assessment and diagnosis are essential. High inter-rater reliability among clinicians means that a patient's assessment, and therefore their care, should not depend on which clinician performs it.

Methods for Calculating Inter-rater Reliability

Several statistical methods are used to calculate inter-rater reliability, each suited to different types of data and research designs. The choice of method depends on the nature of the data (nominal, ordinal, interval, or ratio) and the number of raters involved. Some common methods include:

1. Percent Agreement:

This is the simplest method, calculating the percentage of times the raters agreed on their observations. It is suitable for nominal data (e.g., classifying behaviors into categories). However, it's limited because it doesn't account for chance agreement.

Formula: (Number of agreements / Total number of observations) x 100

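Example: If two raters observed 100 instances and agreed on 80 of them, the percent agreement is 80%. Some of that agreement may be due to chance: if each rater independently labeled 90% of instances as "present", they would agree on about 82% of instances (0.9 × 0.9 + 0.1 × 0.1 = 0.82) even if their labels had nothing to do with the instances themselves.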
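The percent agreement formula translates directly into code. Here is a minimal Python sketch, assuming two raters labeled the same items in the same order; `percent_agreement` is a hypothetical helper, not a library function.

```python
from typing import Hashable, Sequence


def percent_agreement(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Percentage of items on which two raters assigned the same label."""
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must rate the same items")
    if len(rater_a) == 0:
        raise ValueError("at least one rated item is required")
    # Count the items where both raters chose the same label.
    agreements = sum(x == y for x, y in zip(rater_a, rater_b))
    return 100 * agreements / len(rater_a)


# Hypothetical data matching the example: 100 instances, agreement on 80.
a = ["present"] * 50 + ["absent"] * 50
b = ["present"] * 40 + ["absent"] * 10 + ["absent"] * 40 + ["present"] * 10
print(percent_agreement(a, b))  # 80.0
```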

2. Cohen's Kappa (κ):

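Cohen's Kappa is a more sophisticated measure of agreement between two raters that corrects for chance agreement. It's suitable for nominal data and is widely used because it accounts for the probability of agreement occurring by chance alone.

Formula: κ = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of agreement and p_e is the proportion of agreement expected by chance, calculated from how often each rater uses each category.

Kappa values range from -1 to +1, with higher values indicating greater agreement. A value of 0 suggests agreement is no better than chance, a value of 1 indicates perfect agreement, and negative values indicate less agreement than chance would predict.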

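Interpretation of Cohen's Kappa (the commonly cited benchmarks of Landis and Koch, 1977, which are conventions rather than strict cut-offs):

- Below 0.00: Poor agreement

- 0.00-0.20: Slight agreement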

- 0.21-0.40: Fair agreement

- 0.41-0.60: Moderate agreement

- 0.61-0.80: Substantial agreement

- 0.81-1.00: Almost perfect agreement
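A minimal Python sketch of Cohen's Kappa follows. It computes κ = (p_o - p_e) / (1 - p_e) from the observed agreement p_o and the chance agreement p_e implied by each rater's category frequencies, and a second helper maps the result onto the interpretation bands above, labeling negative values "Poor" as Landis and Koch do. Both function names are hypothetical, and the data are invented for illustration.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters: (p_o - p_e) / (1 - p_e)."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("both raters must rate the same, non-empty set of items")
    # p_o: observed proportion of items on which the raters agree.
    p_o = sum(x == y for x, y in zip(rater_a, rater_b)) / n
    # p_e: agreement expected by chance from each rater's category frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


def landis_koch_label(kappa: float) -> str:
    """Map a kappa value onto the conventional Landis and Koch bands."""
    if kappa < 0:
        return "Poor"
    bands = [(0.20, "Slight"), (0.40, "Fair"), (0.60, "Moderate"), (0.80, "Substantial")]
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "Almost perfect"


# Hypothetical data: agreement on 80 of 100 items, but both raters say "yes" 70% of the time.
a = ["yes"] * 60 + ["yes"] * 10 + ["no"] * 10 + ["no"] * 20
b = ["yes"] * 60 + ["no"] * 10 + ["yes"] * 10 + ["no"] * 20
k = cohens_kappa(a, b)
print(round(k, 3), landis_koch_label(k))  # 0.524 Moderate
```

These raters agree on 80% of items, yet because both favor "yes", chance alone predicts 58% agreement (0.7 × 0.7 + 0.3 × 0.3), and kappa comes out at only about 0.52.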

3. Fleiss' Kappa:

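This measure is used when more than two raters are involved. It is often described as an extension of Cohen's Kappa, although strictly it generalizes Scott's pi. It is designed for nominal categories, accounts for chance agreement, and provides a single measure of inter-rater reliability across all raters. Each subject must be rated by the same number of raters, but they need not be the same individuals. The interpretation of Fleiss' Kappa is similar to Cohen's Kappa.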
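A minimal Python sketch of Fleiss' Kappa, assuming the ratings have already been tallied into a table with one row per subject and one column per category, and that every subject received the same number of ratings. The function name and the table are illustrative, not taken from a library or a real study.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa from a subjects-by-categories table of rating counts.

    counts[i][j] is the number of raters who put subject i in category j.
    Every row must sum to the same number of raters (at least two).
    """
    n_subjects = len(counts)
    if n_subjects == 0:
        raise ValueError("need at least one subject")
    n_categories = len(counts[0])
    n_raters = sum(counts[0])
    if n_raters < 2 or any(len(row) != n_categories or sum(row) != n_raters for row in counts):
        raise ValueError("each subject needs the same categories and the same number (>= 2) of raters")

    # Per-subject agreement: the share of rater pairs that chose the same category.
    pairs = n_raters * (n_raters - 1)
    p_bar = sum((sum(c * c for c in row) - n_raters) / pairs for row in counts) / n_subjects

    # Chance agreement from the overall proportion of ratings in each category.
    total = n_subjects * n_raters
    p_e = sum((sum(row[j] for row in counts) / total) ** 2 for j in range(n_categories))
    if p_e == 1:
        raise ValueError("kappa is undefined when every rating falls in one category")
    return (p_bar - p_e) / (1 - p_e)


# Illustrative table: 10 subjects, 14 raters each, 5 categories.
table = [
    [0, 0, 0, 0, 14],
    [0, 2, 6, 4, 2],
    [0, 0, 3, 5, 6],
    [0, 3, 9, 2, 0],
    [2, 2, 8, 1, 1],
    [7, 7, 0, 0, 0],
    [3, 2, 6, 3, 0],
    [2, 5, 3, 2, 2],
    [6, 5, 2, 1, 0],
    [0, 2, 2, 3, 7],
]
print(round(fleiss_kappa(table), 3))  # 0.21
```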

4. Intraclass Correlation Coefficient (ICC):

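The ICC is suited to continuous ratings on an interval or ratio scale, and it is sometimes applied to ordinal scales with many points. It expresses reliability as the proportion of the total variance in the ratings that reflects genuine differences between subjects rather than differences between raters or random error. In theory the ICC ranges from 0 to 1, with higher values indicating greater reliability, although sample estimates can fall below 0. Different ICC forms exist depending on the research design: whether every subject is rated by the same raters (one-way versus two-way models), whether raters are treated as random or fixed, whether absolute agreement or only consistency is required, and whether the reliability of a single rating or of the mean of several ratings is of interest.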
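The sketch below computes one common form, ICC(2,1) in the Shrout and Fleiss notation: a two-way random-effects model, absolute agreement, reliability of a single rater. It is a minimal illustration that assumes a complete table with no missing ratings; the function name and the scores are hypothetical. Other forms combine the same ANOVA mean squares differently.

```python
from typing import Sequence


def icc_2_1(ratings: Sequence[Sequence[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings[i][j] is rater j's score for subject i; the table must be complete.
    """
    n = len(ratings)  # number of subjects
    k = len(ratings[0]) if n else 0  # number of raters
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete table with at least 2 subjects and 2 raters")

    grand = sum(sum(row) for row in ratings) / (n * k)
    subject_means = [sum(row) / k for row in ratings]
    rater_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares: subjects (rows), raters (columns), residual.
    ss_subjects = k * sum((m - grand) ** 2 for m in subject_means)
    ss_raters = n * sum((m - grand) ** 2 for m in rater_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_subjects - ss_raters

    ms_subjects = ss_subjects / (n - 1)
    ms_raters = ss_raters / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denominator = ms_subjects + (k - 1) * ms_error + k * (ms_raters - ms_error) / n
    if denominator == 0:
        raise ValueError("ICC is undefined when the ratings have no variance")
    return (ms_subjects - ms_error) / denominator


# Illustrative scores: 6 subjects (rows) rated by 4 raters (columns).
scores = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print(round(icc_2_1(scores), 2))  # 0.29
```

On these scores the second rater is consistently harsher than the others. Because ICC(2,1) demands absolute agreement, that systematic offset lowers the coefficient; the consistency form ICC(3,1), (ms_subjects - ms_error) / (ms_subjects + (k - 1) * ms_error), comes to about 0.71 on the same data.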

5. Weighted Kappa:

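Weighted kappa is used with ordinal categories, where some disagreements are more serious than others. For example, on a five-point rating scale, one rater giving a 1 and the other a 5 is a much larger disagreement than a 1 versus a 2. Weighted kappa gives partial credit for near misses by weighting each disagreement according to how far apart the two ratings are, most commonly with linear or quadratic weights. Custom weights can also reflect cases where, say, the difference between scores of 1 and 2 matters more than the difference between 4 and 5. Like Cohen's Kappa, it compares two raters.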
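A minimal Python sketch of weighted kappa, assuming ratings are coded as integers from 0 to n_categories - 1 and using disagreement weights |i - j| / (n_categories - 1), squared for quadratic weighting. It computes 1 minus the ratio of observed to chance-expected weighted disagreement. The function and parameter names are hypothetical; with only two categories both weightings reduce to ordinary Cohen's Kappa.

```python
from typing import Sequence


def weighted_kappa(
    rater_a: Sequence[int],
    rater_b: Sequence[int],
    n_categories: int,
    weights: str = "linear",
) -> float:
    """Weighted kappa for two raters on ordinal categories 0 .. n_categories - 1."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b) or n_categories < 2:
        raise ValueError("need two equal-length, non-empty ratings and >= 2 categories")
    if weights not in ("linear", "quadratic"):
        raise ValueError("weights must be 'linear' or 'quadratic'")
    power = 1 if weights == "linear" else 2
    cats = range(n_categories)

    # Disagreement weight: 0 for identical categories, 1 for the two extremes.
    w = [[(abs(i - j) / (n_categories - 1)) ** power for j in cats] for i in cats]

    # Observed cross-tabulation and each rater's marginal totals.
    observed = [[0] * n_categories for _ in cats]
    for x, y in zip(rater_a, rater_b):
        observed[x][y] += 1
    totals_a = [sum(observed[i]) for i in cats]
    totals_b = [sum(observed[i][j] for i in cats) for j in cats]

    observed_dis = sum(w[i][j] * observed[i][j] for i in cats for j in cats) / n
    expected_dis = sum(w[i][j] * totals_a[i] * totals_b[j] for i in cats for j in cats) / n**2
    if expected_dis == 0:
        raise ValueError("weighted kappa is undefined when no disagreement is expected")
    return 1 - observed_dis / expected_dis


# Hypothetical ratings of 10 items on a 0-4 scale.
a = [0, 1, 2, 3, 4, 0, 1, 2, 3, 4]
close = [1, 1, 2, 3, 4, 0, 2, 2, 3, 3]  # three disagreements, each off by one point
far = [4, 1, 2, 3, 4, 0, 4, 2, 3, 0]  # three disagreements, off by three or four points
print(round(weighted_kappa(a, close, 5), 2))  # 0.79
print(round(weighted_kappa(a, far, 5), 2))  # 0.34
```

Both second raters disagree with the first on three of ten items, so unweighted Cohen's Kappa is 0.625 for each pair; the weighting is what separates near misses from gross disagreements.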

Factors Affecting Inter-rater Reliability

Several factors can influence inter-rater reliability, and understanding these factors is crucial for improving the consistency of observations. These factors include:

- Clarity of Operational Definitions: Ambiguous definitions of the behaviors or events being observed can lead to inconsistencies among raters. Clear, concise, and specific operational definitions are essential.

- Training and Calibration: Providing raters with thorough training on the observation protocol and calibrating their understanding through practice sessions can significantly improve agreement.

- Rater Experience: Experienced raters tend to have higher inter-rater reliability than inexperienced raters. Experience leads to better judgment and a more consistent application of the observation criteria.

- Complexity of the Observation Task: Observing complex behaviors or events is more prone to inconsistencies than observing simple ones. Breaking down the observation task into smaller, manageable components can improve reliability.

- Observer Bias: Unconscious biases can affect how observers interpret and record observations. Awareness of potential biases and strategies to minimize them are important.

- Data Collection Method: The method used to collect data (e.g., checklists, rating scales, video recordings) can influence inter-rater reliability. Structured methods generally lead to higher reliability than unstructured methods.

Strategies for Enhancing Inter-rater Reliability

Several strategies can be employed to improve inter-rater reliability:

- Develop Clear Operational Definitions: Establish clear, concise, and unambiguous operational definitions for all variables and behaviors being observed. This minimizes ambiguity and ensures consistency among raters.

- Provide Comprehensive Training: Conduct comprehensive training for all raters, focusing on the observation protocol, data recording procedures, and the interpretation of operational definitions. Use case studies and practice sessions to ensure a common understanding.

- Establish a Calibration Process: Implement a calibration process to standardize the ratings of raters. This involves having raters independently code the same data and discussing discrepancies to reach a consensus.

- Pilot Testing: Conduct a pilot study to test the observation protocol and identify potential problems with reliability before the main study. This allows for adjustments to the protocol to improve consistency.

- Use Multiple Raters: Including multiple raters helps to identify and minimize individual biases. The average of multiple raters' observations is generally more reliable than a single rater's observations.

- Utilize Technology: Technology can assist in enhancing inter-rater reliability. Video recordings can be used for review and discussion, ensuring consistent interpretation. Software can also help automate data analysis and calculations of reliability coefficients.

- Blind Ratings: When possible, raters should be blind to other raters' judgments and any other potentially biasing information to minimize influence.

- Regular Feedback and Monitoring: Provide regular feedback to raters on their performance and monitor inter-rater reliability throughout the study. Address any inconsistencies promptly.
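The point under "Use Multiple Raters" that averaged ratings are more reliable can be quantified with the Spearman-Brown prophecy formula, R_k = kR / (1 + (k - 1)R), where R is the reliability of a single rater and R_k is the reliability of the mean of k raters. This minimal sketch assumes the raters are interchangeable (equally reliable, with independent errors), which real raters only approximate; the function name is hypothetical.

```python
def spearman_brown(single_rater_reliability: float, n_raters: int) -> float:
    """Predicted reliability of the average of n_raters interchangeable raters."""
    if n_raters < 1:
        raise ValueError("n_raters must be at least 1")
    r = single_rater_reliability
    return n_raters * r / (1 + (n_raters - 1) * r)


# How much does averaging help if a single rater's reliability is 0.5?
for k in (1, 2, 3, 5):
    print(k, round(spearman_brown(0.5, k), 3))
# 1 0.5
# 2 0.667
# 3 0.75
# 5 0.833
```

The gains shrink as raters are added, which is worth weighing against the cost of each additional rater.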

Conclusion

Inter-rater reliability is a critical aspect of any research or assessment that involves multiple observers. It shows whether observations are consistent rather than driven by individual observers' biases or interpretations. By choosing a statistic that matches the data and design, understanding the factors that affect agreement, and applying strategies to improve it, researchers can strengthen the trustworthiness and replicability of their findings. Achieving high inter-rater reliability is an ongoing process that requires clear definitions, rigorous training, continued monitoring, and a commitment to objective assessment, and it pays off in more credible research and assessments.
