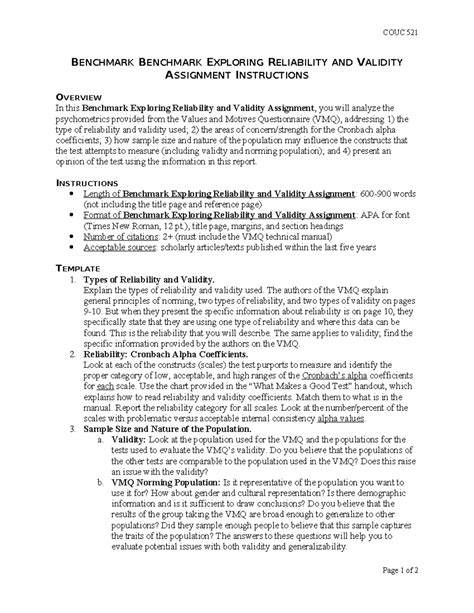

Benchmark Exploring Reliability And Validity Assignment

Onlines

Mar 13, 2025 · 6 min read

Benchmark Exploring Reliability and Validity Assignment: A Comprehensive Guide

This comprehensive guide delves into the crucial aspects of reliability and validity in research, particularly within the context of benchmark assignments. Understanding and demonstrating these concepts are vital for producing high-quality, credible research. We’ll explore various methods for assessing reliability and validity, providing practical examples and strategies for strengthening your research design and analysis.

What are Reliability and Validity?

Before diving into benchmarks, let's clarify these core concepts:

Reliability refers to the consistency and stability of your measurement instrument. A reliable instrument produces similar results under consistent conditions. If you administer a test multiple times, a reliable test will yield similar scores. Think of it as the repeatability of your findings.

Validity refers to the accuracy of your measurement instrument. A valid instrument measures what it intends to measure. If you're measuring intelligence, a valid test actually assesses intelligence, not something else like memory or reading comprehension. It's about the accuracy of your inferences.

These two concepts are intertwined. A test can be reliable but not valid (e.g., a consistently inaccurate scale). However, a test cannot be valid without being reliable. Reliable measurements are a necessary, but not sufficient, condition for validity.
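The scale example can be made concrete with a few numbers. The sketch below uses made-up readings and a hypothetical true weight (`TRUE_WEIGHT_KG`, `biased_scale`, `noisy_scale`, and `summarize` are illustrative names, not from any standard tool). It treats the spread of repeated readings as a rough stand-in for reliability and the average error as a rough stand-in for one threat to validity.

```python
from statistics import mean, pstdev

TRUE_WEIGHT_KG = 70.0  # hypothetical true value, assumed known for illustration

# Hypothetical repeated readings of the same object on two scales
biased_scale = [72.0, 72.1, 71.9, 72.0, 72.0]  # very consistent, but about 2 kg too high
noisy_scale = [66.0, 74.5, 69.0, 73.0, 67.5]   # right on average, but inconsistent


def summarize(readings, true_value):
    """Return (spread, bias) for a list of repeated readings.

    spread: population standard deviation (low spread = consistent readings)
    bias:   mean reading minus the true value (systematic error)
    """
    spread = pstdev(readings)
    bias = mean(readings) - true_value
    return spread, bias


for name, readings in [("biased", biased_scale), ("noisy", noisy_scale)]:
    spread, bias = summarize(readings, TRUE_WEIGHT_KG)
    print(f"{name} scale: spread = {spread:.2f} kg, bias = {bias:+.2f} kg")
```

The biased scale is reliable but not valid: it repeats itself closely while staying wrong. The noisy scale shows why reliability is necessary: even with no systematic error, no single reading can be trusted.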

Types of Reliability

Several methods assess reliability. Understanding the nuances is key to choosing the appropriate method for your benchmark assignment:

1. Test-Retest Reliability:

This assesses the consistency of a measure over time. The same test is administered to the same group on two different occasions. High correlation between the scores indicates high test-retest reliability. The time interval between tests is crucial; too short might lead to memory effects, while too long might reflect real changes in the construct being measured.
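As a minimal sketch of how that correlation might be computed, the example below implements the Pearson correlation coefficient by hand and applies it to invented scores from two administrations. The data, the two-week interval, and the function name `pearson_r` are assumptions for illustration only.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squared deviations
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


# Hypothetical scores for the same six participants, tested two weeks apart
time_1 = [12, 15, 9, 20, 17, 11]
time_2 = [13, 14, 10, 19, 18, 10]

r = pearson_r(time_1, time_2)
print(f"test-retest r = {r:.2f}")
```

The same calculation applies to parallel-forms reliability, with scores on Form A and Form B in place of the two occasions.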

2. Parallel-Forms Reliability:

Two equivalent forms of the same test are administered to the same group. High correlation between the scores on both forms indicates high parallel-forms reliability. This method helps control for practice effects that might influence test-retest reliability.

3. Internal Consistency Reliability:

This evaluates the consistency of items within a single test. Common methods include:

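- Cronbach's alpha: A widely used statistic computed from the number of items and the ratio of the summed item variances to the variance of the total score. It rises as the average inter-item correlation and the number of items increase. Generally, a Cronbach's alpha above 0.7 is considered acceptable, but the acceptable threshold depends on the context and the nature of the construct being measured.

- Split-half reliability: The test is divided into two halves (for example, odd- and even-numbered items), and the scores on the two halves are correlated. Because each half is shorter than the full test, the correlation is usually adjusted with the Spearman-Brown formula to estimate the reliability of the full-length test.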
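Both internal-consistency statistics can be sketched in a few lines. The example below assumes a small, invented data set (five respondents, four items on a 1 to 5 scale). The function names are illustrative, and the odd-even split is only one of several possible ways to halve a test.

```python
from math import sqrt
from statistics import variance


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


def cronbach_alpha(rows):
    """Cronbach's alpha; rows holds one list of item scores per respondent."""
    k = len(rows[0])                                    # number of items
    item_vars = sum(variance(col) for col in zip(*rows))
    total_var = variance([sum(row) for row in rows])    # variance of total scores
    return k / (k - 1) * (1 - item_vars / total_var)


def split_half_reliability(rows):
    """Odd-even split-half correlation with the Spearman-Brown correction."""
    odd_half = [sum(row[0::2]) for row in rows]   # items 1, 3, ...
    even_half = [sum(row[1::2]) for row in rows]  # items 2, 4, ...
    r_half = pearson_r(odd_half, even_half)
    return 2 * r_half / (1 + r_half)


# Hypothetical responses: 5 respondents x 4 items
responses = [
    [4, 5, 4, 5],
    [2, 3, 2, 2],
    [3, 3, 4, 3],
    [5, 4, 5, 5],
    [1, 2, 1, 2],
]

alpha = cronbach_alpha(responses)
split_half = split_half_reliability(responses)
print(f"Cronbach's alpha = {alpha:.2f}")
print(f"Split-half (Spearman-Brown) = {split_half:.2f}")
```

With so few respondents these estimates are unstable; the sketch is meant to show the arithmetic, not a realistic sample size.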

4. Inter-rater Reliability:

When using subjective measures (e.g., observations, interviews), inter-rater reliability assesses the agreement between different raters. Statistics such as percentage agreement or Cohen's kappa quantify the level of agreement; kappa is often preferred because it corrects for the agreement expected by chance alone. High inter-rater reliability indicates that different raters interpret and score the data consistently.
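To illustrate the difference between the two statistics, here is a minimal sketch for two raters assigning categorical codes. The ratings are invented, and the function names `percent_agreement` and `cohens_kappa` are illustrative rather than taken from a particular library.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Proportion of cases on which the two raters gave the same code."""
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters: agreement corrected for chance."""
    n = len(rater_a)
    observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the raters' marginal proportions per category
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when both raters use a single category")
    return (observed - expected) / (1 - expected)


# Hypothetical pass/fail judgements by two raters on the same ten submissions
rater_a = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "pass", "fail", "pass"]
rater_b = ["pass", "fail", "fail", "pass", "fail", "pass", "pass", "pass", "fail", "pass"]

agreement = percent_agreement(rater_a, rater_b)
kappa = cohens_kappa(rater_a, rater_b)
print(f"percent agreement = {agreement:.2f}, Cohen's kappa = {kappa:.2f}")
```

Kappa comes out lower than raw agreement here because two raters who both say "pass" most of the time would agree fairly often by chance.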

Types of Validity

Establishing validity is more complex than reliability and involves different types of evidence:

1. Content Validity:

This assesses whether the instrument adequately covers all aspects of the construct being measured. It requires a careful examination of the test content to ensure it aligns with the definition of the construct. Expert judgment is often crucial in establishing content validity.

2. Criterion-Related Validity:

This assesses the extent to which the instrument's scores correlate with an external criterion. Two subtypes exist:

- Concurrent validity: The instrument's scores are correlated with a criterion measured at the same time.

- Predictive validity: The instrument's scores predict future performance on a criterion.

For example, an aptitude test might have high predictive validity if it accurately predicts future job performance.

3. Construct Validity:

This is the most comprehensive type of validity and involves converging evidence from multiple sources to demonstrate that the instrument measures the intended construct. It encompasses several aspects:

- Convergent validity: The instrument's scores correlate with other measures of the same or similar constructs.

- Discriminant validity: The instrument's scores correlate only weakly, or not at all, with measures of unrelated constructs.

- Factor analysis: A statistical technique used to identify underlying latent factors that contribute to the observed scores. This can provide evidence for the construct's dimensionality and help refine the instrument.

Applying Reliability and Validity to Benchmark Assignments

Benchmark assignments, often used in educational settings or performance evaluations, require rigorous attention to reliability and validity. Let's consider some examples:

Example 1: Assessing Student Learning Outcomes

A benchmark assessment designed to measure student understanding of a specific topic needs to demonstrate both reliability and validity. Reliability can be estimated through internal consistency (Cronbach's alpha, which for items scored right or wrong is equivalent to KR-20) when the assessment consists of multiple items, such as multiple-choice questions. Validity can be addressed by ensuring the questions accurately reflect the learning objectives (content validity) and that high scores correlate with successful performance in subsequent courses (predictive validity).

Example 2: Evaluating Employee Performance

A performance appraisal system using multiple rating scales must show inter-rater reliability (consistency across different raters). Validity can be addressed by ensuring the rating scales accurately reflect the key job responsibilities (content validity) and that performance ratings correlate with objective performance indicators (criterion-related validity).

Example 3: Comparing the Effectiveness of Different Teaching Methods

A study comparing two teaching methods needs to ensure that the measurement instruments used to assess student learning are both reliable and valid. Reliability can be addressed through test-retest reliability or parallel-forms reliability, while validity can be examined through content validity (do the assessments accurately measure the learning outcomes?) and construct validity (do the assessments measure the intended constructs, and not something else?).

Strategies for Enhancing Reliability and Validity

Several strategies can improve the reliability and validity of your benchmark assignment:

- Clearly Define Constructs: Precisely define the constructs you are measuring. Ambiguity can lead to both low reliability and low validity.

- Use Established Instruments: Whenever possible, use well-established and validated instruments. This saves time and effort and provides a strong foundation for your research.

- Develop Clear Instructions: Provide clear and concise instructions for participants to minimize ambiguity and ensure consistent administration of the instrument.

- Pilot Test Your Instrument: Always pilot test your instrument with a small sample before administering it to the larger sample. This helps identify any problems with clarity, ambiguity, or practicality.

- Use Multiple Measures: Using multiple measures of the same construct can strengthen the validity of your findings. Triangulation from different sources provides stronger evidence.

- Control for Extraneous Variables: In experimental designs, control for extraneous variables that might confound the results. Random assignment to groups helps control for these variables.

- Appropriate Statistical Analyses: Use appropriate statistical techniques to analyze the data and assess reliability and validity. Choose analyses that match the level of measurement and the research design.

Conclusion

Understanding and demonstrating reliability and validity are crucial for creating robust benchmark assignments. This involves selecting appropriate methods for assessing reliability (test-retest, parallel-forms, internal consistency, inter-rater) and validity (content, criterion-related, construct). By carefully defining constructs, using established instruments, piloting your assessments, and employing appropriate statistical analyses, you can enhance the quality and credibility of your research. Strong reliability and validity make your findings more trustworthy and your benchmark assignment more meaningful. Always strive for transparency and rigorous methodology to build confidence in your results. This attention to detail not only strengthens your specific assignment but also develops skills applicable throughout your academic and professional career.
