Speech Synthesizers Use _____ To Determine Context Before Outputting.

Onlines

Mar 09, 2025 · 6 min read


Speech Synthesizers Use Contextual Understanding to Enhance Naturalness

Speech synthesizers, also known as text-to-speech (TTS) systems, have come a long way. Gone are the days of robotic, monotone voices. Modern TTS systems strive for naturalness and fluency, a feat made possible, in large part, by their ability to understand and utilize context. Before outputting synthesized speech, these sophisticated systems employ a variety of techniques to determine the context of the input text, significantly improving the quality and realism of the generated audio.

The Importance of Context in Speech Synthesis

Context is crucial in speech because it influences numerous aspects of pronunciation, intonation, and phrasing. Without understanding the context, a TTS system might produce unnatural-sounding speech that lacks the nuances of human communication. Consider these examples:

- Pronunciation: The word "read" is pronounced differently depending on its tense. In "Yesterday I read a book," it sounds like "red"; in "I will read aloud," it rhymes with "reed." A context-aware system recognizes the tense and adjusts the pronunciation accordingly.

- Intonation: The intonation of a sentence conveys meaning and emotion. A statement like "I'm happy." typically ends with falling pitch, while a yes/no question like "Are you happy?" typically ends with a rise. Contextual analysis allows the synthesizer to adjust pitch and rhythm to reflect this difference, leading to more natural-sounding speech.

- Emphasis: Certain words carry more weight than others, and which ones depends on context. In a neutral reading of "I ordered a large pizza," the main stress falls on "pizza"; in reply to "Did you order a small pizza?", it shifts to "large," because that word carries the new information. A sophisticated system infers this from the surrounding words and the overall meaning, ensuring proper stress during speech generation.

- Disambiguation: Many words have multiple meanings, and context determines which one is intended. For example, "bank" can refer to a financial institution or the side of a river. For a TTS system, disambiguation matters most when the senses are pronounced differently, as with "bass" (the fish versus the low-pitched sound). Contextual understanding enables the system to select the appropriate meaning and pronunciation.
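The intonation point can be illustrated with a small rule-based sketch. It assumes punctuation is reliable and uses a simplified default for English: yes/no questions usually end with a rise, while statements and wh-questions usually end with a fall. The names `sentence_type`, `final_contour`, and the `WH_WORDS` list are hypothetical; real systems predict full pitch contours rather than a single label.

```python
import re

# A small, non-exhaustive set of English wh-words (an assumption for this sketch).
WH_WORDS = {"who", "what", "when", "where", "why", "which", "whose", "whom", "how"}


def sentence_type(sentence: str) -> str:
    """Classify a sentence as 'statement', 'yes_no_question' or 'wh_question'."""
    text = sentence.strip()
    if not text.endswith("?"):
        return "statement"
    words = re.findall(r"[a-z']+", text.lower())
    if words and words[0] in WH_WORDS:
        return "wh_question"
    return "yes_no_question"


def final_contour(sentence: str) -> str:
    """Pick a sentence-final pitch movement using a common English default."""
    # Yes/no questions tend to rise at the end; statements and wh-questions tend to fall.
    return "rising" if sentence_type(sentence) == "yes_no_question" else "falling"


for s in ["I'm happy.", "Are you happy?", "Why are you happy?"]:
    print(f"{s!r}: {sentence_type(s)} -> {final_contour(s)}")
```

Punctuation is only a proxy: a declarative sentence spoken as a question ("You're happy?") would be misclassified, which is one reason production systems learn intonation from data instead.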

Methods for Contextual Understanding in Speech Synthesis

Speech synthesizers use several methods to determine context:

1. Part-of-Speech (POS) Tagging:

POS tagging is a fundamental technique that assigns a grammatical tag (noun, verb, adjective, adverb, and so on) to each word in the input text. These tags tell the system what role each word plays in the sentence. This matters most for heteronyms whose pronunciation follows their part of speech: "record" is stressed on the first syllable as a noun and on the second as a verb. Content words such as nouns and verbs are also more likely to be stressed than function words such as articles and prepositions.
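A minimal sketch of how POS tags can drive heteronym pronunciation, assuming the tags are already available in Penn Treebank format (here they are written by hand rather than produced by a tagger). The two-entry `HETERONYMS` lexicon and its ARPAbet-style transcriptions are illustrative, and `coarse_pos` and `pronounce` are hypothetical helper names; words outside the lexicon simply pass through as a placeholder for the regular letter-to-sound step.

```python
from typing import Dict, List, Tuple

# Hypothetical mini-lexicon of heteronyms: word -> {coarse POS: pronunciation}.
# ARPAbet-style transcriptions; the digit after a vowel marks stress (1 = primary).
HETERONYMS: Dict[str, Dict[str, str]] = {
    "record": {"NOUN": "R EH1 K ER0 D", "VERB": "R IH0 K AO1 R D"},
    "present": {"NOUN": "P R EH1 Z AH0 N T", "VERB": "P R IY0 Z EH1 N T"},
}


def coarse_pos(tag: str) -> str:
    """Collapse a Penn Treebank tag such as 'NN' or 'VBP' into NOUN/VERB/OTHER."""
    if tag.startswith("NN"):
        return "NOUN"
    if tag.startswith("VB"):
        return "VERB"
    return "OTHER"


def pronounce(tagged: List[Tuple[str, str]]) -> List[str]:
    """Return one pronunciation per token; non-heteronyms are returned unchanged
    as a stand-in for the ordinary letter-to-sound step."""
    result = []
    for word, tag in tagged:
        variants = HETERONYMS.get(word.lower(), {})
        result.append(variants.get(coarse_pos(tag), word))
    return result


# Hand-written tags; in a real pipeline a POS tagger would supply them.
sentence = [("They", "PRP"), ("record", "VBP"), ("a", "DT"), ("record", "NN")]
print(pronounce(sentence))
```

The same spelling "record" receives two different stress patterns in one sentence, which a lookup that ignored POS could not produce.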

2. Named Entity Recognition (NER):

NER systems identify and classify named entities in the text, such as people's names, organizations, locations, dates, and monetary values. Recognizing these entities allows the system to handle them appropriately. Names often do not follow the spelling-to-pronunciation rules of common words, so they may need a dedicated lexicon or special rules, and dates and monetary amounts must be expanded into words before they can be spoken (for example, "$3.50" becomes "three dollars and fifty cents"). NER therefore helps the system achieve accurate pronunciation and phrasing for names and other named entities.
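Once an entity has been recognized, it usually has to be verbalized. The sketch below handles one entity type, US-dollar amounts, and uses a regular expression as a simple rule-based stand-in for a trained NER model. The pattern, the helper names (`number_to_words`, `verbalize_money`, `normalize`), and the supported range (below one million) are all assumptions made for illustration.

```python
import re

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty",
        "sixty", "seventy", "eighty", "ninety"]


def number_to_words(n: int) -> str:
    """Spell out an integer 0 <= n < 1,000,000 in English."""
    if not 0 <= n < 1_000_000:
        raise ValueError("this sketch only handles 0 <= n < 1,000,000")
    if n < 20:
        return ONES[n]
    if n < 100:
        tens, rest = divmod(n, 10)
        return TENS[tens] + ("-" + ONES[rest] if rest else "")
    if n < 1000:
        hundreds, rest = divmod(n, 100)
        return ONES[hundreds] + " hundred" + (" " + number_to_words(rest) if rest else "")
    thousands, rest = divmod(n, 1000)
    return number_to_words(thousands) + " thousand" + (" " + number_to_words(rest) if rest else "")


# Dollar amounts such as $12, $1,200 or $3.50 (a simplified, hypothetical pattern).
MONEY = re.compile(r"\$(\d{1,3}(?:,\d{3})+|\d+)(?:\.(\d{2}))?")


def verbalize_money(match: re.Match) -> str:
    """Turn one matched amount into words, e.g. '$3.50' -> 'three dollars and fifty cents'."""
    dollars = int(match.group(1).replace(",", ""))
    cents = int(match.group(2)) if match.group(2) else 0
    parts = []
    if dollars or not cents:
        parts.append(number_to_words(dollars) + (" dollar" if dollars == 1 else " dollars"))
    if cents:
        parts.append(number_to_words(cents) + (" cent" if cents == 1 else " cents"))
    return " and ".join(parts)


def normalize(text: str) -> str:
    """Replace every recognized dollar amount in the text with its spoken form."""
    return MONEY.sub(verbalize_money, text)


print(normalize("The ticket costs $3.50 and parking is $12."))
```

Passing a function to `re.sub` keeps recognition (the pattern) separate from verbalization (the replacement), which mirrors the split between NER and the normalization step that follows it.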

3. Semantic Role Labeling (SRL):

SRL goes beyond simple POS tagging by identifying the semantic roles of words within a sentence. It analyzes the relationships between words and determines their functions within the overall sentence structure. This detailed understanding of the sentence's meaning helps in generating more natural and accurate prosody (intonation and rhythm). For example, SRL helps distinguish the agent, patient, and instrument in a sentence like "The chef (agent) prepared the meal (patient) with a knife (instrument)."
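One way role labels can inform prosody is by keeping each argument intact when the sentence is split into breath-sized chunks, so that pauses fall between arguments rather than inside them. The sketch assumes the role spans have already been produced (they are written by hand here, standing in for an SRL model), and the `RoleSpan` class, `chunk_by_roles` function, and `max_words` limit are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RoleSpan:
    text: str
    role: str  # e.g. "AGENT", "PREDICATE", "PATIENT", "INSTRUMENT"


def chunk_by_roles(spans: List[RoleSpan], max_words: int = 5) -> List[str]:
    """Pack whole role spans into chunks of at most `max_words` words.
    A span is never split, so chunk boundaries fall only between arguments."""
    chunks: List[str] = []
    current: List[RoleSpan] = []
    count = 0
    for span in spans:
        n = len(span.text.split())
        if current and count + n > max_words:
            chunks.append(" ".join(s.text for s in current))
            current, count = [], 0
        current.append(span)
        count += n
    if current:
        chunks.append(" ".join(s.text for s in current))
    return chunks


# Hand-labeled frame for the example sentence, standing in for SRL output.
frame = [
    RoleSpan("The chef", "AGENT"),
    RoleSpan("prepared", "PREDICATE"),
    RoleSpan("the meal", "PATIENT"),
    RoleSpan("with a knife", "INSTRUMENT"),
]
print(" | ".join(chunk_by_roles(frame)))
```

With the default limit the instrument phrase "with a knife" becomes its own chunk, and no limit can ever place a break between "with" and "a knife".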

4. Syntactic Parsing:

Syntactic parsing involves analyzing the grammatical structure of the sentence to create a parse tree. This tree represents the hierarchical relationships between words and phrases, providing a detailed representation of the sentence's structure. The system uses this structural information to determine the appropriate phrasing, pauses, and intonation for natural speech generation.
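As a rough illustration of using a parse tree for phrasing, the sketch below walks the top-level constituents of a clause and inserts a break marker after any constituent that is long enough, a heuristic reflecting the tendency for long subjects to be followed by a pause. The tuple-based tree format, the `|` break symbol, and the `min_words` threshold are assumptions; the trees are built by hand rather than by a parser.

```python
from typing import List, Tuple, Union

# A tree is either a leaf word or a tuple (label, child, child, ...).
Tree = Union[str, Tuple]


def leaves(tree: Tree) -> List[str]:
    """Collect the words of a (sub)tree from left to right."""
    if isinstance(tree, str):
        return [tree]
    return [word for child in tree[1:] for word in leaves(child)]


def phrase_with_breaks(tree: Tree, min_words: int = 3) -> str:
    """Join the words of a clause, inserting '|' after any top-level
    constituent with at least `min_words` words (a heuristic)."""
    children = tree[1:]
    out: List[str] = []
    for i, child in enumerate(children):
        words = leaves(child)
        out.extend(words)
        if i < len(children) - 1 and len(words) >= min_words:
            out.append("|")
    return " ".join(out)


long_subject = ("S",
    ("NP", ("DT", "The"), ("JJ", "old"), ("NN", "lighthouse"), ("NN", "keeper")),
    ("VP", ("VBD", "closed"), ("NP", ("DT", "the"), ("NN", "door"))))
short_subject = ("S",
    ("NP", ("PRP", "He")),
    ("VP", ("VBD", "closed"), ("NP", ("DT", "the"), ("NN", "door"))))

print(phrase_with_breaks(long_subject))
print(phrase_with_breaks(short_subject))
```

The flat word string "The old lighthouse keeper closed the door" gives no hint where the subject ends; the tree supplies that boundary directly.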

5. Word Embeddings and Contextualized Word Representations:

Word embeddings, such as Word2Vec and GloVe, represent words as dense vectors, typically with a few hundred dimensions. Words with related meanings end up close together in this space. These embeddings are static: each word receives a single vector, so "bass" has the same representation whether it refers to a fish or a musical instrument. Contextualized word representations, like those produced by BERT and ELMo, go further by computing a different vector for each occurrence of a word based on the text around it. This allows the system to capture the nuances of word meaning and use this information to improve pronunciation, intonation, and overall naturalness.
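The difference between static and contextual vectors can be shown with a deliberately crude toy. The 3-dimensional `STATIC` vectors below are invented for illustration, and the `contextual` function (a word's own vector plus the weighted mean of its neighbors) is a hypothetical stand-in, not how BERT or ELMo actually compute representations. It only demonstrates the idea that the same word can receive different vectors in different sentences.

```python
import math
from typing import List

# Hand-made toy "static" embeddings (invented values, 3 dimensions only).
# Rough axes: [fish/outdoors, music, function-word-ness].
STATIC = {
    "bass":   [0.5, 0.5, 0.0],
    "guitar": [0.0, 1.0, 0.0],
    "played": [0.1, 0.9, 0.1],
    "caught": [0.9, 0.1, 0.1],
    "fish":   [1.0, 0.0, 0.0],
    "a":      [0.0, 0.0, 1.0],
    "he":     [0.0, 0.0, 1.0],
}


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def contextual(tokens: List[str], i: int, window: int = 2, weight: float = 0.5) -> List[float]:
    """Toy contextual vector: the word's static vector plus `weight` times the
    mean of the static vectors of neighbors within `window` positions."""
    base = STATIC[tokens[i]]
    neighbors = [STATIC[t] for j, t in enumerate(tokens) if j != i and abs(j - i) <= window]
    if not neighbors:
        return list(base)
    mean = [sum(col) / len(neighbors) for col in zip(*neighbors)]
    return [b + weight * m for b, m in zip(base, mean)]


fishing = "he caught a bass".split()
music = "he played a bass".split()
fish_ctx = contextual(fishing, 3)
music_ctx = contextual(music, 3)

print("static 'bass' is identical in both sentences:", STATIC["bass"])
print("fishing sense vs fish:  ", round(cosine(fish_ctx, STATIC["fish"]), 3))
print("music sense vs fish:    ", round(cosine(music_ctx, STATIC["fish"]), 3))
print("music sense vs guitar:  ", round(cosine(music_ctx, STATIC["guitar"]), 3))
```

In the fishing sentence the vector for "bass" moves toward "fish", and in the music sentence it moves toward "guitar", which is the kind of signal a system could use to choose between the two pronunciations.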

6. Language Models:

Language models are trained on text corpora to estimate the probability of a word sequence, or of the next word given the preceding context. Large language models (LLMs), trained on massive corpora, capture many of the semantic relationships between words and sentences. This is particularly useful for handling ambiguous words and sentences: the system can select the interpretation that is most probable given the surrounding context. Using LLMs also helps the system generate more fluent and coherent speech.
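The principle of choosing the most probable reading does not require a large model to demonstrate. The sketch below swaps in a tiny add-one-smoothed bigram model, trained on a handful of invented sentences, to decide whether the abbreviation "St." should be read as "street" or "saint". The corpus, the function names, and the restriction to a single abbreviation are assumptions for illustration; an LLM would score the candidates with far richer context.

```python
import math
from collections import Counter
from typing import List, Tuple

# Tiny invented training corpus; a real model would use vastly more text.
CORPUS = [
    "we drove down main street",
    "the shop on main street is open",
    "they flew to saint louis",
    "saint louis is on the river",
    "she lives on elm street",
]


def train_bigram(sentences: List[str]) -> Tuple[Counter, Counter]:
    """Count unigrams and bigrams, with sentence start/end markers."""
    unigrams, bigrams = Counter(), Counter()
    for s in sentences:
        tokens = ["<s>"] + s.split() + ["</s>"]
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams


def log_prob(tokens: List[str], unigrams: Counter, bigrams: Counter, vocab_size: int) -> float:
    """Add-one smoothed bigram log-probability of a token sequence."""
    padded = ["<s>"] + tokens + ["</s>"]
    return sum(
        math.log((bigrams[(a, b)] + 1) / (unigrams[a] + vocab_size))
        for a, b in zip(padded, padded[1:])
    )


def expand_st(sentence: str, model: Tuple[Counter, Counter]) -> str:
    """Try each reading of 'st.' and keep the one the model scores highest.
    For simplicity every 'st.' in the sentence gets the same reading."""
    unigrams, bigrams = model
    vocab_size = len(unigrams)
    tokens = sentence.lower().split()
    candidates = []
    for reading in ("street", "saint"):
        candidate = [reading if t == "st." else t for t in tokens]
        candidates.append((log_prob(candidate, unigrams, bigrams, vocab_size), candidate))
    return " ".join(max(candidates)[1])


model = train_bigram(CORPUS)
print(expand_st("We walked along Main St.", model))
print(expand_st("We flew to St. Louis", model))
```

The words immediately before and after "St." decide the outcome: "main" and the sentence end favor "street", while "to" and "louis" favor "saint". The output is lowercased because the sketch normalizes case before scoring.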

7. Dialogue Management Systems:

For conversational TTS applications, dialogue management systems are essential. These systems maintain the context of the ongoing conversation, tracking the topics discussed and the relationships between utterances. This context is needed to generate appropriate responses and keep the conversation coherent. The system can also use the conversational history to anticipate what might be said next and to shape intonation and phrasing; for example, words that repeat information already mentioned are usually less prominent than words that introduce something new.
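A minimal sketch of conversational context affecting prominence, based on the given/new distinction: content words already mentioned in the dialogue are left unaccented, and new content words are marked for accent (shown in uppercase). The `DialogueContext` class, the `FUNCTION_WORDS` list, and the uppercase convention are hypothetical, and the sketch ignores word forms (it would treat "order" and "ordered" as different words).

```python
import re

# Hypothetical list of function words that never receive an accent here.
FUNCTION_WORDS = {"a", "an", "the", "is", "are", "was", "to", "of", "in", "on",
                  "and", "or", "it", "i", "you", "we", "do", "did", "not"}


class DialogueContext:
    """Minimal dialogue-state sketch that remembers content words already mentioned."""

    def __init__(self) -> None:
        self.given = set()

    def mark_accents(self, utterance: str) -> str:
        """Uppercase new content words (to be accented); lowercase everything else."""
        words = re.findall(r"[A-Za-z']+", utterance)
        marked = []
        for w in words:
            key = w.lower()
            if key not in FUNCTION_WORDS and key not in self.given:
                marked.append(w.upper())
            else:
                marked.append(key)
        # Everything said in this turn becomes given for later turns.
        self.given.update(w.lower() for w in words if w.lower() not in FUNCTION_WORDS)
        return " ".join(marked)


ctx = DialogueContext()
print(ctx.mark_accents("Do you want a pizza?"))
print(ctx.mark_accents("I want a large pizza."))
```

In the second turn only "large" is marked, because "want" and "pizza" were already introduced in the first turn.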

Advanced Techniques for Enhanced Contextual Understanding

Beyond the fundamental techniques described above, some advanced methods are further enhancing the ability of speech synthesizers to understand and utilize context:

- Machine Learning and Deep Learning: The models that perform contextual analysis are increasingly trained with machine learning and deep learning. They learn from large datasets of text and paired audio, which lets them pick up patterns of context that hand-written rules miss.

-

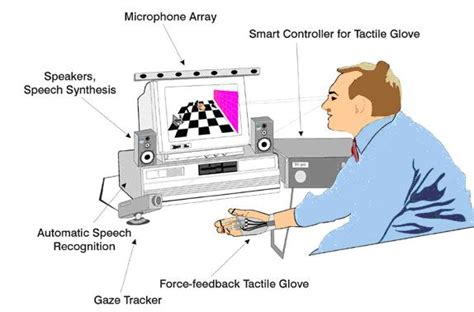

Multimodal Context: Some advanced systems incorporate multimodal context, considering not just text but also visual or other sensory information. For example, a system might use visual cues to understand the emotion or context of a situation, leading to more appropriate intonation and expression in the synthesized speech.

- Personalized Context: Personalization is a major direction for TTS. Systems are being developed that adapt to an individual's voice, speaking style, and preferences, producing a customized and natural-sounding experience.

The Impact of Contextual Understanding on Speech Synthesis Quality

Contextual understanding has a direct effect on the quality of synthesized speech. Context-aware systems generate speech that is:

- More natural-sounding: Context yields more realistic intonation, phrasing, and pronunciation, so the output sounds more human-like.

- More intelligible: Resolving ambiguities such as heteronyms and abbreviations correctly makes the speech clearer and easier to understand.

- More engaging: Context allows more expressive and emotionally nuanced delivery, which makes the synthesized speech more engaging for the listener.

Conclusion: The Future of Context-Aware Speech Synthesis

Contextual understanding is a critical aspect of modern speech synthesis. Continued advances in natural language processing (NLP), machine learning, and deep learning keep pushing the boundaries of what's possible, and we can expect speech synthesis systems to become even more natural-sounding and engaging as these technologies improve. Multimodal context, personalized models, and sophisticated dialogue management systems point toward conversational TTS that sounds increasingly human-like. Progress in speech synthesis will continue to depend on how accurately and effectively these systems use context.
