Onlines

Mar 25, 2025 · 6 min read

Training the Model is a Step in the Machine Learning Process: A Comprehensive Guide

Training the model is a crucial step in the machine learning process. It is the stage where your algorithm learns from data and develops the ability to make predictions or decisions on unseen data. But what exactly is the machine learning process, and how does model training fit into the bigger picture? This guide walks through each stage of that process and offers practical insights for those looking to build and deploy successful machine learning models.

The Machine Learning Process: A Bird's-Eye View

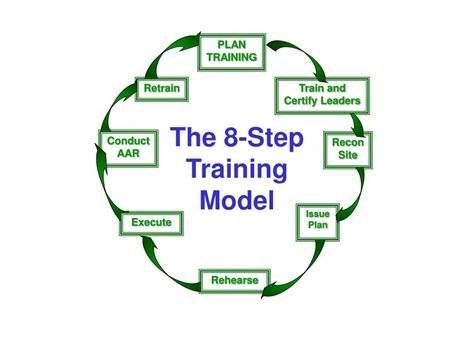

Before diving into model training, let's establish the broader context. The machine learning process is typically represented as a cyclical workflow, involving several key stages:

- Problem Definition: This initial phase is paramount. You must clearly define the problem you're trying to solve using machine learning. What is the desired outcome? What questions are you trying to answer? This clarity guides all subsequent steps. A poorly defined problem leads to wasted effort and ineffective models.

- Data Collection and Preparation: This is arguably the most time-consuming stage. You need to gather relevant data from various sources and check its quality, completeness, and consistency. This includes cleaning the data (handling missing values, outliers, and inconsistencies), transforming it into a suitable format for your chosen algorithm (e.g., feature scaling, encoding categorical variables), and splitting it into training, validation, and testing sets. Transformations that learn statistics from the data, such as feature scaling, should be fitted on the training set only and then applied to the other sets, so that no information leaks from the validation or testing data. The quality of your data directly impacts the performance of your model.

- Model Selection: Choosing the right model is crucial. The type of problem you're solving (classification, regression, clustering, etc.) dictates the appropriate algorithm. Factors like data size, dimensionality, and desired accuracy also influence model selection. Experimentation and iterative refinement are often necessary.

- Model Training: This is where the algorithm learns from the training data. The training process involves feeding the data to the chosen algorithm, which adjusts its internal parameters to minimize errors and improve its predictive ability. This stage can require significant computational resources, especially for complex models and large datasets.

- Model Evaluation: After training, you need to assess the model's performance on data it has not seen. The validation set is used to compare models and tune hyperparameters, while the testing set is held back for a final, unbiased estimate. Metrics such as accuracy, precision, recall, and F1-score (for classification) or RMSE and MAE (for regression) are commonly used. This stage helps identify potential issues like overfitting or underfitting.

- Model Deployment and Monitoring: Once you're satisfied with the model's performance, you can deploy it to a production environment. This could involve integrating it into an application, website, or other system. Continuous monitoring of the model's performance is vital: it shows whether predictions stay accurate and signals when changes in the data over time call for retraining.

- Iteration and Refinement: Machine learning is an iterative process. Based on the evaluation and monitoring results, you may need to revisit earlier stages: collecting more data, trying different algorithms, adjusting hyperparameters, or refining the data preprocessing steps. This iterative approach is key to building high-performing models.
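Two of the stages above, splitting the data and scoring a classifier, are easy to sketch in plain Python. The snippet below is a minimal illustration rather than a production recipe: the function names `train_val_test_split` and `precision_recall_f1`, the default split fractions, and the fixed random seed are all assumptions made for this example.

```python
import random


def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle the rows and split them into training, validation, and test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1-score for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1
```

In practice you would train on `train`, compare candidate models on `val`, and compute the final metrics on `test` only once.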

Deep Dive into Model Training

Now, let's zoom in on the core topic: model training. This stage is where the algorithm learns patterns and relationships from the data. The process typically involves the following steps:

1. Choosing an Algorithm: The algorithm you select dictates the training process. Different algorithms have different training methods and requirements. Common algorithms include:

- Linear Regression: Predicts a continuous value based on a linear relationship between features and the target variable.

- Logistic Regression: Predicts the probability of a binary outcome.

- Support Vector Machines (SVMs): Find the hyperplane that separates the classes with the largest margin.

- Decision Trees: Build a tree-like structure of feature-based splits for classification or regression.

- Random Forests: An ensemble method that combines multiple decision trees to improve accuracy.

- Neural Networks: Complex models loosely inspired by the human brain, capable of learning highly non-linear relationships.
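To make the difference between the first two algorithms concrete, here is a minimal sketch for a single input feature. The function names are hypothetical; the linear fit uses the standard least-squares formulas, and the logistic prediction assumes the weight and bias have already been learned elsewhere.

```python
import math


def fit_simple_linear_regression(xs, ys):
    """Least-squares fit of y = slope * x + intercept for one feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx  # assumes the xs are not all identical
    intercept = mean_y - slope * mean_x
    return slope, intercept


def logistic_predict_proba(x, weight, bias):
    """Probability of the positive class under a one-feature logistic model."""
    z = weight * x + bias
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid maps any real z into (0, 1)
```

The linear model returns an unbounded continuous value, while the logistic model squeezes the same kind of linear score into a probability between 0 and 1.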

2. Defining Hyperparameters: Hyperparameters are settings that control the learning process. They are not learned from the data but are set before training begins. Examples include:

- Learning rate: Determines the step size taken during gradient descent (an optimization algorithm).

- Number of trees (for Random Forests): Sets the size of the ensemble. More trees generally give more stable predictions at a higher computational cost.

- Number of layers and neurons (for Neural Networks): Impacts the model's capacity to learn complex patterns.

- Regularization parameters: Control how strongly complex models are penalized, which helps limit overfitting.
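As a rough illustration of how a regularization hyperparameter enters the picture, the sketch below adds an L2 penalty to a mean squared error loss for a linear model. The names `l2_regularized_loss` and `l2_strength` are assumptions for this example: `l2_strength` is the hyperparameter you fix before training, while `weights` and `bias` are the parameters that training would adjust.

```python
def mean_squared_error(predictions, targets):
    """Average squared difference between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def l2_regularized_loss(weights, bias, features, targets, l2_strength):
    """MSE of a linear model plus an L2 penalty on the weights."""
    predictions = [
        sum(w * x for w, x in zip(weights, row)) + bias for row in features
    ]
    # The penalty grows with the squared size of the weights; the bias is
    # conventionally left unpenalized.
    penalty = l2_strength * sum(w ** 2 for w in weights)
    return mean_squared_error(predictions, targets) + penalty
```

With `l2_strength=0` this reduces to the plain loss; larger values push the optimizer towards smaller weights.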

3. The Training Loop: For models trained with gradient-based optimization, such as neural networks, the core of the training process is an iterative loop that involves the following steps:

- Forward Pass: The algorithm processes the input data and makes predictions.

- Loss Calculation: The difference between the predictions and the actual target values is calculated using a loss function (e.g., mean squared error, cross-entropy).

- Backpropagation: For neural networks, the gradient of the loss with respect to each parameter is computed by propagating the error backward through the network.

- Optimization: An optimization algorithm (e.g., gradient descent, Adam) adjusts the model's parameters to minimize the loss function.

- Iteration: This process repeats for multiple epochs (passes through the entire training dataset) until the model's performance converges or reaches a predefined stopping criterion.
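The loop above can be sketched for the simplest possible case: a one-feature linear model trained with full-batch gradient descent on mean squared error. This is a minimal example, not a general training framework. The function name, the default learning rate, and the epoch count are assumptions, and the gradients are written out by hand because a model this small needs no backpropagation through layers.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=500):
    """Fit y = w * x + b with full-batch gradient descent on MSE."""
    w, b = 0.0, 0.0
    n = len(xs)
    loss_history = []
    for _ in range(epochs):
        # Forward pass: predictions with the current parameters.
        predictions = [w * x + b for x in xs]
        # Loss calculation: mean squared error.
        loss = sum((p - y) ** 2 for p, y in zip(predictions, ys)) / n
        loss_history.append(loss)
        # Gradient computation: derivatives of the loss w.r.t. w and b.
        grad_w = (2 / n) * sum((p - y) * x for p, y, x in zip(predictions, ys, xs))
        grad_b = (2 / n) * sum(p - y for p, y in zip(predictions, ys))
        # Optimization: step against the gradient, scaled by the learning rate.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, loss_history
```

For data generated by `y = 2x + 1`, such as `xs = [0, 1, 2, 3]` and `ys = [1, 3, 5, 7]`, the parameters move towards `w ≈ 2` and `b ≈ 1` as the number of epochs grows.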

4. Addressing Overfitting and Underfitting: Two common challenges in model training are overfitting and underfitting.

- Overfitting: The model fits the training data too closely, including noise and irrelevant details, resulting in poor performance on unseen data. Techniques to address overfitting include regularization, dropout (for neural networks), and using simpler models; cross-validation helps detect the problem and choose between these remedies.

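- Underfitting: The model is too simple to capture the underlying patterns in the data, resulting in poor performance on both training and unseen data. Techniques to address underfitting include using more complex models, adding more informative features, reducing regularization, or training for longer. Collecting more data alone rarely helps, because the limitation lies in the model rather than in the sample size.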
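Cross-validation makes both problems visible by comparing the error on the data a model was fitted to with the error on held-out folds. Here is a minimal sketch of k-fold cross-validation. The helper names and the `fit` and `error` callables are hypothetical placeholders for whatever model and metric you use, and the data is assumed to be shuffled beforehand.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, val_indices) for each of k contiguous folds."""
    indices = list(range(n_samples))
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)  # spread the remainder
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


def cross_validate(xs, ys, k, fit, error):
    """Return the mean training error and mean validation error over k folds."""
    train_errors, val_errors = [], []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        x_train = [xs[i] for i in train_idx]
        y_train = [ys[i] for i in train_idx]
        x_val = [xs[i] for i in val_idx]
        y_val = [ys[i] for i in val_idx]
        model = fit(x_train, y_train)
        train_errors.append(error(model, x_train, y_train))
        val_errors.append(error(model, x_val, y_val))
    return sum(train_errors) / k, sum(val_errors) / k
```

A low training error paired with a much higher validation error points to overfitting; high errors on both point to underfitting.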

5. Monitoring Training Progress: It's essential to monitor the training process to ensure it's progressing as expected. This typically involves tracking the loss function and evaluation metrics on the validation set during training. Visualizing the training process (e.g., plotting the loss curve) can provide valuable insights into the model's behavior.
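One common use of this monitoring is early stopping: halting training once the validation loss has stopped improving. The sketch below is an assumption-laden example rather than any specific library's behavior. It takes a list of per-epoch validation losses, and the `patience` and `min_delta` parameters are names chosen for this illustration.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops at the first epoch where the loss has failed to improve
    by more than min_delta for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # The loss kept improving often enough: training ran to the last epoch.
    return len(val_losses) - 1, best_epoch
```

In a real training loop you would also save the model parameters at `best_epoch` so they can be restored after stopping.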

Advanced Techniques in Model Training

Beyond the fundamental steps outlined above, several advanced techniques can significantly improve model training:

- Transfer Learning: Reusing a model that was pre-trained on a large dataset as the starting point for a smaller, related task, which can accelerate training and improve performance. This is especially useful when dealing with limited data.

- Ensemble Methods: Combining multiple models to improve prediction accuracy and robustness. Bagging, boosting, and stacking are common ensemble techniques.

- Hyperparameter Tuning: Systematically searching for the optimal hyperparameter values to maximize model performance. Techniques include grid search, random search, and Bayesian optimization.

- Data Augmentation: Artificially increasing the size of the training dataset by creating modified versions of existing data. This is particularly helpful for image and text data.
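Of the techniques above, grid search is the simplest to sketch: try every combination of candidate hyperparameter values and keep the best-scoring one. In the example below, `grid_search` and its `evaluate` callback are hypothetical names. `evaluate` is assumed to train a model with the given settings and return a validation score where higher is better.

```python
from itertools import product


def grid_search(param_grid, evaluate):
    """Try every combination in param_grid and return (best_params, best_score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)  # e.g. validation accuracy for these settings
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score
```

A call might look like `grid_search({"learning_rate": [0.01, 0.1, 1.0], "depth": [2, 3, 4]}, evaluate)`, which runs nine evaluations. The cost grows multiplicatively with each added hyperparameter, which is one reason random search and Bayesian optimization are often preferred for larger search spaces.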

Conclusion: Training the Model – A Journey Towards Intelligent Systems

Training the model is not an isolated step but a critical component within the broader machine learning process. It is where the algorithm goes from a set of mathematical equations to a predictive or decision-making tool. By carefully selecting the right algorithm, tuning hyperparameters, and addressing issues like overfitting and underfitting, you can build high-performing models capable of solving real-world problems. Remember that building a successful machine learning model is iterative: embrace experimentation, continuous monitoring, and a commitment to refining your approach.
