Which Of The Following Is Not True About Deep Learning

Onlines

Mar 23, 2025 · 6 min read


Which of the following is NOT true about Deep Learning? Debunking Common Myths

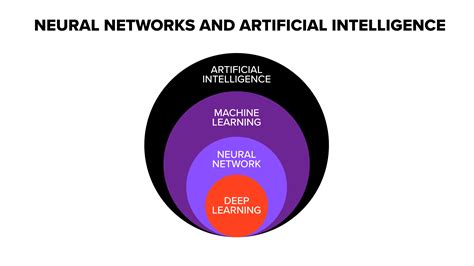

Deep learning, a subfield of machine learning, has taken the world by storm. Its applications span numerous fields, from self-driving cars and medical diagnosis to fraud detection and natural language processing. Despite these impressive achievements, several misconceptions about deep learning persist. This article examines statements about deep learning that are not true, exploring the limitations, challenges, and often misunderstood aspects of this powerful technology.

Myth 1: Deep Learning Requires Massive Datasets for Success

Large deep learning models often benefit from massive datasets, but it is not universally true that every deep learning project requires one, and the need for large datasets is often exaggerated. How much data a project actually needs depends heavily on several factors, including:

- Model Complexity: Simpler models may achieve reasonable performance with smaller datasets. More complex models, with a higher number of parameters, generally require more data to avoid overfitting.

- Data Quality: High-quality, carefully labeled data is often more valuable than a vast amount of noisy or poorly labeled data. A model trained on a smaller, cleaner dataset can outperform one trained on a larger, messy one.

- Transfer Learning: Transfer learning starts from a model pre-trained on a massive dataset and fine-tunes it on a smaller, task-specific dataset. This removes the need to collect a massive dataset and train a model from scratch.

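- Data Augmentation: Techniques such as image rotation, flipping, and cropping create modified copies of existing examples, increasing the effective size and variety of a dataset. Provided the transformations do not change the correct label, this improves model robustness and reduces the reliance on very large datasets.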
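
As a rough illustration of the augmentation idea, the sketch below uses NumPy (an assumption; the article does not name a library) to produce randomly flipped, rotated, and cropped copies of each image in a batch. The function names `augment_image` and `augment_batch` are hypothetical. Augmentation only recombines information that is already in the data, and a transformation is only safe when it preserves the label: rotating a handwritten "6" by 180 degrees, for example, turns it into a "9".

```python
import numpy as np


def augment_image(image, rng, crop_size):
    """Return a randomly flipped, rotated and cropped copy of an H x W (or H x W x C) array."""
    # Horizontal flip with probability 0.5.
    if rng.random() < 0.5:
        image = np.fliplr(image)
    # Rotation by a random multiple of 90 degrees (0, 90, 180 or 270).
    image = np.rot90(image, k=int(rng.integers(0, 4)))
    # Random crop_size x crop_size window; crop_size must not exceed the image height or width.
    h, w = image.shape[:2]
    top = int(rng.integers(0, h - crop_size + 1))
    left = int(rng.integers(0, w - crop_size + 1))
    return image[top:top + crop_size, left:left + crop_size]


def augment_batch(images, copies, crop_size, seed=0):
    """Create `copies` augmented versions of every image and stack them into one array."""
    rng = np.random.default_rng(seed)
    return np.stack([augment_image(img, rng, crop_size)
                     for img in images
                     for _ in range(copies)])


# Example: 10 random 32x32 RGB "images" become 40 augmented 28x28 crops.
images = np.random.default_rng(1).random((10, 32, 32, 3))
augmented = augment_batch(images, copies=4, crop_size=28)
print(augmented.shape)  # (40, 28, 28, 3)
```

In real training pipelines augmentation is usually applied on the fly, with fresh random transformations each epoch, rather than materialized up front as in this sketch.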

Therefore, while large datasets are advantageous, they are not an absolute necessity for successful deep learning applications. Clever data engineering, model selection, and transfer learning can often yield impressive results with more modest datasets.
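
The core of transfer learning with a small dataset is to keep the pre-trained layers frozen and train only a small new "head" on top of them. The NumPy sketch below shows that structure under loudly stated assumptions: `BACKBONE_W` is a hypothetical stand-in for a real pre-trained backbone (here just a fixed random projection followed by ReLU), the dataset is synthetic, and `train_head` fits a logistic-regression head by plain gradient descent. In a framework such as PyTorch, freezing is typically done by setting `requires_grad = False` on the backbone's parameters.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a pre-trained backbone: a fixed random projection followed by ReLU.
# In a real project these weights would be loaded from a model trained on a large dataset.
BACKBONE_W = rng.normal(size=(20, 64)) / np.sqrt(20)


def extract_features(x):
    """Frozen feature extractor: BACKBONE_W is read but never updated."""
    return np.maximum(x @ BACKBONE_W, 0.0)


def log_loss(p, y):
    eps = 1e-12
    return float(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))


def train_head(features, labels, lr=0.1, epochs=500):
    """Fit only a logistic-regression head on top of the frozen features."""
    n, d = features.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(features @ w + b)))
        err = p - labels                      # gradient of the log loss w.r.t. the logits
        w -= lr * features.T @ err / n
        b -= lr * err.mean()
    return w, b


def predict_proba(x, w, b):
    return 1.0 / (1.0 + np.exp(-(extract_features(x) @ w + b)))


# A small labeled dataset for the new task (synthetic, for illustration only).
x_small = rng.normal(size=(200, 20))
y_small = (x_small[:, 0] + x_small[:, 1] > 0).astype(float)

w_head, b_head = train_head(extract_features(x_small), y_small)
print("training log loss:", log_loss(predict_proba(x_small, w_head, b_head), y_small))
```

Only the 65 head parameters (64 weights plus a bias) are learned here, which is why a few hundred labeled examples can be enough; the quality of the result in practice depends on how well the pre-trained features fit the new task.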

Myth 2: Deep Learning Models are Always "Black Boxes"

The "black box" nature of deep learning is a frequently cited concern. It's often stated that we cannot understand how these complex models arrive at their predictions. While it's true that interpreting the internal workings of very deep neural networks can be challenging, it's not accurate to say they are always opaque. Significant progress has been made in developing techniques for explaining deep learning models' decisions. These techniques include:

- Saliency Maps: These highlight the parts of the input data that most influenced the model's prediction, providing visual insights into the decision-making process.

- Layer-wise Relevance Propagation (LRP): This method propagates the prediction's relevance back through the network layers, identifying the features that contributed most to the final output.

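- Attention Mechanisms: These mechanisms let models weight specific parts of the input more heavily, and inspecting the attention weights can hint at which aspects mattered most for a prediction. Attention weights are not always a faithful explanation of the model's reasoning, however, so they are best treated as one clue among several.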

- Explainable AI (XAI): This growing research field, which encompasses techniques like those above, is dedicated to developing methods and tools that make AI models, including deep learning models, more transparent and interpretable.
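
A basic saliency map is the magnitude of the gradient of the model's output score with respect to each input feature (for an image, each pixel). The sketch below assumes a hypothetical tiny network with random weights (5 inputs, 8 tanh hidden units, 1 output) and computes that gradient by hand with the chain rule; real frameworks obtain the same quantity through automatic differentiation.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical tiny network: 5 inputs -> 8 tanh hidden units -> 1 output score.
W1, b1 = rng.normal(size=(5, 8)), np.zeros(8)
w2, b2 = rng.normal(size=8), 0.0


def score(x):
    """Model output (e.g. the logit of the predicted class) for a single input vector x."""
    return float(np.tanh(x @ W1 + b1) @ w2 + b2)


def saliency(x):
    """Vanilla gradient saliency: |d score / d x_i| for every input feature i."""
    h = np.tanh(x @ W1 + b1)
    # Chain rule, using d tanh(u)/du = 1 - tanh(u)**2:
    # d score / d x = W1 @ (w2 * (1 - h**2)).
    grad = W1 @ (w2 * (1.0 - h ** 2))
    return np.abs(grad)


x = rng.normal(size=5)
s = saliency(x)
print("saliency per feature:", np.round(s, 3))
print("most influential feature:", int(np.argmax(s)))
```

Larger values mark features where a small change would move the output most. This is a local, first-order view of the model's behavior around one input, not a complete account of how it reasons.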

While complete transparency may remain a challenge for the most complex models, the development of these interpretability techniques is gradually lifting the "black box" lid.

Myth 3: Deep Learning Requires Specialized Hardware

While it's true that deep learning models can benefit significantly from specialized hardware like GPUs and TPUs, which accelerate training and inference, it's not true that specialized hardware is always a requirement. Deep learning can be, and is, performed on standard CPUs. The trade-off, of course, is speed. Training large models on CPUs can take significantly longer than on GPUs or TPUs. However, for smaller models and less demanding tasks, CPUs are perfectly adequate. Furthermore, cloud computing services provide access to powerful GPUs and TPUs without requiring significant upfront investment in specialized hardware. This makes deep learning accessible to a broader range of researchers and developers.
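
To illustrate that small networks train fine without any accelerator, the sketch below trains a one-hidden-layer network on the classic XOR problem using nothing but NumPy on the CPU. The hidden size, learning rate, and number of epochs are arbitrary choices for this toy example, not recommendations, and the timing it prints depends on the machine.

```python
import time

import numpy as np


def train_xor_mlp(hidden=8, lr=0.5, epochs=5000, seed=0):
    """Train a one-hidden-layer network on XOR with full-batch gradient descent (NumPy, CPU only)."""
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)
    W1, b1 = rng.normal(size=(2, hidden)), np.zeros(hidden)
    W2, b2 = rng.normal(size=(hidden, 1)), np.zeros(1)
    losses = []
    for _ in range(epochs):
        # Forward pass: tanh hidden layer, sigmoid output.
        h = np.tanh(X @ W1 + b1)
        p = 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))
        p_safe = np.clip(p, 1e-12, 1 - 1e-12)  # avoid log(0) in the loss
        losses.append(float(-np.mean(y * np.log(p_safe) + (1 - y) * np.log(1 - p_safe))))
        # Backward pass for binary cross-entropy averaged over the 4 examples.
        dz2 = (p - y) / len(X)
        dW2, db2 = h.T @ dz2, dz2.sum(axis=0)
        dz1 = (dz2 @ W2.T) * (1.0 - h ** 2)
        dW1, db1 = X.T @ dz1, dz1.sum(axis=0)
        # Plain gradient-descent update.
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return p, losses


start = time.perf_counter()
probs, losses = train_xor_mlp()
print(f"trained on CPU in {time.perf_counter() - start:.2f} s")
print("predicted probabilities:", np.round(probs.ravel(), 3))
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

The same reasoning scales down, not up: models with millions or billions of parameters trained on large datasets are where GPUs and TPUs make the decisive difference.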

Myth 4: Deep Learning Automatically Solves Every Problem

This is perhaps the most significant misconception. Deep learning is a powerful tool, but it's not a panacea. It's not a "plug-and-play" solution that automatically solves every problem. Its success depends heavily on several critical factors:

- Data Availability and Quality: As discussed earlier, the quality and quantity of data significantly impact performance. Deep learning models need sufficient, relevant, and well-labeled data to learn effectively.

- Problem Suitability: Deep learning is particularly well-suited for tasks involving unstructured data, such as images, text, and audio. It is not always the best approach for structured (tabular) data, where methods such as gradient-boosted decision trees are often competitive, or for tasks requiring explicit, rule-based reasoning.

- Computational Resources: Training complex deep learning models can be computationally intensive and require significant resources.

- Expertise: Developing and deploying successful deep learning models requires specialized knowledge and expertise in areas such as data preprocessing, model architecture selection, hyperparameter tuning, and model evaluation.

Applying deep learning blindly without careful consideration of these factors is likely to yield disappointing results.

Myth 5: Deep Learning is Always More Accurate Than Other Methods

While deep learning often achieves state-of-the-art performance on various tasks, it's not universally superior to other machine learning methods. The best approach depends heavily on the specific problem, the available data, and the desired trade-offs between accuracy, computational cost, and interpretability. In certain scenarios, simpler models like linear regression, support vector machines, or decision trees might be more appropriate and even more accurate than a complex deep learning model. Choosing the right algorithm is a critical aspect of successful machine learning, and deep learning should be considered one tool among many in the machine learning arsenal.
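
A practical habit that follows from this is to fit a simple baseline before reaching for a deep model. The sketch below uses synthetic data that is, by construction, linear plus a little noise (an assumption chosen to make the point), fits ordinary least squares with `np.linalg.lstsq`, and compares it with a trivial "predict the mean" baseline on held-out rows. Any deep model for such a problem would need to beat the linear fit to justify its extra cost.

```python
import numpy as np

rng = np.random.default_rng(42)
# Synthetic tabular data whose target really is linear in the features (plus small noise).
n, d = 500, 3
X = rng.normal(size=(n, d))
true_w, true_b = np.array([2.0, -1.0, 0.5]), 3.0
y = X @ true_w + true_b + rng.normal(scale=0.1, size=n)

# Hold out the last 100 rows for evaluation.
X_tr, X_te, y_tr, y_te = X[:400], X[400:], y[:400], y[400:]


def fit_linear(X, y):
    """Ordinary least squares with an intercept column, solved with np.linalg.lstsq."""
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef[:-1], coef[-1]


w, b = fit_linear(X_tr, y_tr)
mse_linear = float(np.mean((X_te @ w + b - y_te) ** 2))
mse_mean = float(np.mean((y_tr.mean() - y_te) ** 2))  # trivial "predict the mean" baseline
print(f"linear MSE: {mse_linear:.4f}, mean-baseline MSE: {mse_mean:.4f}")
```

Here the test error of the linear model is close to the noise level, so a deep network has essentially no room to improve on it while being harder to train and to interpret.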

Myth 6: Deep Learning Requires Extensive Programming Skills

While a solid grasp of programming is beneficial, extensive programming skills are not strictly necessary to work with deep learning. High-level frameworks such as TensorFlow, PyTorch, and Keras simplify the process significantly: they provide pre-built layers, optimizers, and training utilities that abstract away many low-level details, making deep learning accessible to users with less programming experience. Furthermore, many cloud-based platforms offer user-friendly interfaces for building and deploying deep learning models with minimal coding.

Myth 7: Once Trained, a Deep Learning Model Requires No Maintenance

This is a significant misunderstanding. Once a deep learning model is trained and deployed, it's not a "set-it-and-forget-it" situation. Deep learning models require ongoing maintenance and monitoring. This includes:

- Monitoring Performance: Regularly evaluating the model's performance on new data is essential to detect any degradation in accuracy or unexpected behavior.

- Retraining: As new data becomes available, retraining the model with updated data is often necessary to maintain its accuracy and relevance.

- Addressing Concept Drift: Concept drift occurs when the relationship between the inputs and the target changes over time; a related problem, data drift, occurs when the distribution of the inputs themselves shifts. Both degrade accuracy, and regular retraining or other adaptation strategies are needed to address them.

- Security: Deep learning models can be vulnerable to adversarial attacks, where malicious inputs are designed to mislead the model. Regular security audits and defenses are crucial.
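
One simple way to automate part of this monitoring is to compare the distribution of each input feature in production against the training data. The sketch below implements the Population Stability Index (PSI), a common drift statistic, in NumPy; the function name and the synthetic samples are assumptions for illustration. A widely quoted rule of thumb (not a formal standard) treats PSI below about 0.1 as stable and above about 0.25 as a significant shift.

```python
import numpy as np


def population_stability_index(reference, current, bins=10):
    """PSI of one feature: reference (e.g. training) sample vs. current (production) sample."""
    # Interior bin edges at reference quantiles, so each bin holds about 1/bins of the reference data.
    inner_edges = np.quantile(reference, np.linspace(0, 1, bins + 1)[1:-1])
    # Bin index 0..bins-1 for every value; values outside the reference range land in the end bins.
    ref_counts = np.bincount(np.searchsorted(inner_edges, reference, side="right"), minlength=bins)
    cur_counts = np.bincount(np.searchsorted(inner_edges, current, side="right"), minlength=bins)
    # Convert to fractions; a small floor avoids division by zero and log(0) for empty bins.
    ref_frac = np.clip(ref_counts / len(reference), 1e-6, None)
    cur_frac = np.clip(cur_counts / len(current), 1e-6, None)
    return float(np.sum((cur_frac - ref_frac) * np.log(cur_frac / ref_frac)))


rng = np.random.default_rng(0)
train_feature = rng.normal(size=5000)            # distribution seen at training time
stable_batch = rng.normal(size=5000)             # production data from the same distribution
drifted_batch = rng.normal(loc=1.0, size=5000)   # production data whose mean has shifted
print(f"PSI stable:  {population_stability_index(train_feature, stable_batch):.3f}")
print(f"PSI drifted: {population_stability_index(train_feature, drifted_batch):.3f}")
```

PSI only detects changes in the inputs. Detecting concept drift in the strict sense, where the correct output for a given input changes, also requires fresh labeled data to measure the model's actual accuracy.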

Conclusion: A Balanced Perspective on Deep Learning

Deep learning is a transformative technology with remarkable potential, but it's crucial to have a balanced and realistic understanding of its capabilities and limitations. This article has debunked several common myths, highlighting the nuances and challenges involved in applying deep learning effectively. While it's a powerful tool, it's not a magic bullet. Successful deep learning applications require careful planning, appropriate data, suitable model selection, and ongoing monitoring and maintenance. By understanding these aspects, we can leverage the power of deep learning responsibly and effectively. Remember that deep learning is just one piece of the larger puzzle of Artificial Intelligence, and a holistic approach considering other techniques is often necessary for optimal results. Don't fall prey to hype; embrace the potential while acknowledging the inherent complexities and limitations.
