Which Type Of Splunk Query Searches Through Unstructured Log Records

Onlines

Apr 03, 2025 · 6 min read


Which Splunk Query Searches Through Unstructured Log Records?

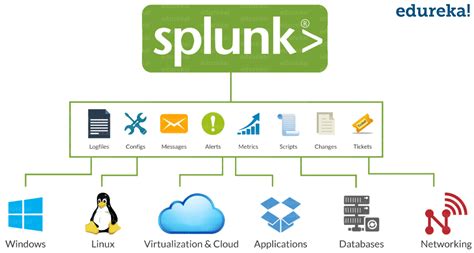

Splunk's power lies in its ability to ingest and analyze vast quantities of machine-generated data, including structured and unstructured log records. While structured data neatly fits into predefined fields, making querying straightforward, unstructured data presents a unique challenge. This article dives deep into the Splunk queries best suited for navigating the complexities of unstructured log records, helping you unlock valuable insights hidden within your data.

Understanding Unstructured Log Data

Unstructured log data lacks a predefined format. Unlike structured logs with clearly defined fields (e.g., timestamp, severity, message), unstructured logs are typically free-form text. This means the information isn't easily parsed or categorized automatically. Examples include:

- Application Logs: Logs from custom applications that don't follow a standard logging format. They might contain error messages, stack traces, or free-text descriptions.

- Network Logs: Raw packet captures or network flow data without standardized field separation.

- Security Logs: Logs from intrusion detection systems or security information and event management (SIEM) solutions that contain narrative descriptions of security events.

- System Logs: System messages that lack consistent formatting across different operating systems or applications.

Splunk's Approach to Unstructured Data

Splunk excels at handling unstructured data through its powerful parsing capabilities and flexible querying language. The key lies in understanding how to extract meaningful information from the free-form text using various techniques:

- Regular Expressions (Regex): The cornerstone of unstructured data analysis in Splunk. A regex defines a pattern that matches specific sequences of characters within a log line, which is crucial for pulling key pieces of information out of seemingly chaotic text.

- Field Extractions: Splunk lets you define field extractions using regex, either ad hoc with the rex command or as saved extractions that apply automatically at search time. This effectively adds structure to unstructured data. Once fields are extracted, you can use ordinary field-based searches for efficient filtering and analysis.

- Tokenization: When it indexes data, Splunk breaks each event into individual terms (tokens). This is what makes keyword searches fast, and it is particularly helpful when the data lacks clear field separators.

- Machine Learning (ML): Built-in commands such as anomalydetection, together with the Splunk Machine Learning Toolkit, can help identify patterns and anomalies within unstructured data, even without predefined fields. This is particularly useful for operational and security monitoring.
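The tokenization idea above can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the breaker characters in BREAKERS are a hypothetical choice, not Splunk's actual (configurable) segmentation rules, and tokenize and keyword_match are illustrative helpers rather than any Splunk API. The sketch shows why a keyword search matches whole terms, case-insensitively.

```python
import re

# Hypothetical breaker set: whitespace plus common punctuation.
# Splunk's real segmentation is configurable; this only approximates the idea.
BREAKERS = re.compile(r"[\s,;:=\[\]()\"'|/]+")


def tokenize(line: str) -> set[str]:
    """Split a raw log line into a set of lowercase terms."""
    return {tok.lower() for tok in BREAKERS.split(line) if tok}


def keyword_match(line: str, *keywords: str) -> bool:
    """True if every keyword appears as a whole term (case-insensitive)."""
    terms = tokenize(line)
    return all(k.lower() in terms for k in keywords)


line = "2025-04-03 12:00:01 ERROR: payment failed for user=alice (timeout)"
print(keyword_match(line, "error", "timeout"))  # True
print(keyword_match(line, "fail"))  # False: "fail" is not a whole term
```

Because "fail" is only part of the term "failed", a whole-term lookup does not find it; matching partial terms is what wildcards are for.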

Essential Splunk Queries for Unstructured Logs

The following queries demonstrate various approaches to effectively search and analyze unstructured log records in Splunk:

1. Keyword Search and Simple Pattern Matching

This is the most fundamental approach. Let's say you have application logs with error messages that consistently begin with "ERROR:". A simple keyword search finds all events containing such errors:

index=app_logs "ERROR:"

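This query uses double quotes to search for the literal phrase "ERROR:". This is a keyword search, not a regular expression: Splunk's base search matches terms and phrases and does not interpret regex syntax such as .*. For a true pattern match, pipe the results to the regex command, which keeps only the events whose field matches the expression:

index=app_logs "ERROR:" | regex _raw="ERROR:\s+\w+"

Here \s+ matches one or more whitespace characters and \w+ one or more word characters, so only events in which "ERROR:" is followed by a word are kept. Running the keyword search first lets Splunk narrow the results using its index before the slower regex filter is applied.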
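To make the difference between a literal phrase search and a regular-expression filter concrete, here is a small Python sketch over a hypothetical list of events. It only mimics the behaviour with Python's re module, and the pattern ERROR:\s+\w+ is an illustrative choice. Python's in operator is case-sensitive while Splunk keyword search is not, so the sample events use upper-case text throughout.

```python
import re

# Hypothetical sample events.
events = [
    "ERROR: disk full on /dev/sda1",
    "ERROR:",
    "INFO: retrying after ERROR: timeout",
]

# Literal phrase match: the event just has to contain "ERROR:".
literal_hits = [e for e in events if "ERROR:" in e]

# Regex match: "ERROR:" followed by whitespace and at least one word
# character, similar in spirit to piping to Splunk's regex command.
pattern = re.compile(r"ERROR:\s+\w+")
regex_hits = [e for e in events if pattern.search(e)]

print(literal_hits)  # all three events
print(regex_hits)    # the bare "ERROR:" event is dropped
```

The literal search accepts all three events, while the regex rejects the bare "ERROR:" line because nothing follows the colon.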

2. Extracting Fields with rex

The rex command extracts data from your logs based on regular expressions and creates new fields from it. This greatly improves your ability to analyze and filter unstructured data. Suppose your logs contain error messages with error codes in the format "Error Code: ABC123XYZ". You can extract the error code into a new field:

index=app_logs | rex field=_raw "Error Code:\s*(?<errorCode>\w+)" | table _time, errorCode

This command uses rex to extract the error code, capturing it into a field named errorCode. The (?<errorCode>\w+) part is a named capture group, assigning the matched text to the errorCode field.
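Here is a rough Python emulation of what this rex extraction does, assuming each event is a hypothetical (time, raw text) pair. Python writes named groups as (?P<name>...), whereas Splunk's PCRE-based rex accepts (?<name>...). The rex function below is an illustrative helper, not a Splunk API. Events that do not match are kept with an empty errorCode, mirroring the fact that rex adds fields but does not filter events.

```python
import re

# Python spells named groups (?P<name>...); Splunk's rex uses (?<name>...).
ERROR_CODE = re.compile(r"Error Code:\s*(?P<errorCode>\w+)")


def rex(raw_events):
    """Mimic `rex ... | table _time, errorCode` on (time, raw) pairs.
    Non-matching events stay in the output with errorCode=None."""
    rows = []
    for time, raw in raw_events:
        m = ERROR_CODE.search(raw)
        rows.append({"_time": time, "errorCode": m.group("errorCode") if m else None})
    return rows


rows = rex([
    ("12:00:01", "Request failed. Error Code: ABC123XYZ (retry=0)"),
    ("12:00:05", "Request succeeded"),
])
print(rows)
```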

3. Combining rex with Other Commands for Advanced Analysis

You can combine rex with other Splunk commands to perform more sophisticated analyses. For instance, let's say you want to find all errors related to a specific module and count their occurrences:

index=app_logs
| rex field=_raw "Error Code:\s*(?<errorCode>\w+)\s*Module:\s*(?<module>\w+)"
| stats count by errorCode, module
| where module="payment"

This query first extracts the error code and module name, then uses the stats command to count the occurrences of each combination, finally filtering the results to only show those related to the "payment" module.
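The same pipeline can be approximated in Python to show what each stage contributes: a regex extracts the two fields, a Counter plays the role of stats count by errorCode, module, and the final dictionary comprehension acts like the where filter. The function name and sample events are hypothetical.

```python
import re
from collections import Counter

PATTERN = re.compile(r"Error Code:\s*(?P<errorCode>\w+)\s*Module:\s*(?P<module>\w+)")


def count_by_code_and_module(raw_events, module_filter):
    """Rough equivalent of: rex | stats count by errorCode, module | where module=..."""
    counts = Counter()
    for raw in raw_events:
        m = PATTERN.search(raw)
        if m:  # stats skips events where a by-field is missing
            counts[(m["errorCode"], m["module"])] += 1
    # Keep only rows for the requested module, like the final where clause.
    return {key: n for key, n in counts.items() if key[1] == module_filter}


events = [
    "Error Code: E42 Module: payment",
    "Error Code: E42 Module: payment",
    "Error Code: E7 Module: payment",
    "Error Code: E42 Module: auth",
    "Request OK",
]
result = count_by_code_and_module(events, "payment")
print(result)
```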

4. Leveraging transaction for Multi-Line Log Analysis

Activity recorded in unstructured logs often spans several separate log entries. The transaction command groups related events into a single result so that you can analyze them together. Imagine you're tracking a specific transaction across several log entries:

index=transaction_logs
| transaction startswith="Transaction Started" endswith="Transaction Completed"
| stats count

This query groups log lines into transactions that start with "Transaction Started" and end with "Transaction Completed," allowing you to analyze the entire transaction lifecycle.
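A simplified Python sketch of the grouping that transaction performs with startswith and endswith is shown below. It assumes events arrive in time order and that transactions never interleave; Splunk's command also supports grouping fields and limits such as maxspan and maxpause, none of which are modelled here. group_transactions is a hypothetical helper.

```python
def group_transactions(lines, start="Transaction Started", end="Transaction Completed"):
    """Group lines into transactions that open on a line containing `start`
    and close on a line containing `end`. Lines outside a start/end pair,
    and transactions that never complete, are dropped."""
    transactions, current = [], None
    for line in lines:
        if start in line:
            current = [line]  # a new start opens a fresh transaction
        elif current is not None:
            current.append(line)
            if end in line:
                transactions.append(current)
                current = None
    return transactions


log = [
    "10:00 Transaction Started id=1",
    "10:01 validating card",
    "10:02 Transaction Completed id=1",
    "10:03 heartbeat",
    "10:04 Transaction Started id=2",
    "10:05 validating card",
]
txns = group_transactions(log)
print(len(txns))  # 1: the second transaction never completes
```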

5. Using spath for JSON Data within Unstructured Logs

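If your logs contain JSON data, the spath command provides a convenient way to extract values from JSON objects without complex regex. By default spath reads the _raw field, so the query below works when the whole event is JSON; if the JSON is embedded in a longer line of text, first extract it into a field with rex and then point spath at that field with input=. Assuming a log event that contains a JSON payload: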

index=json_logs
| spath output=json_data path=errors.errorCode
| table _time, json_data

This query uses spath to extract the value of errors.errorCode from the JSON data and displays it in a table.
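The following Python sketch shows what following a path such as errors.errorCode means, including the embedded-JSON case where the payload has to be cut out of a longer line first. The spath function here is a hypothetical stand-in that handles nested objects only; Splunk's spath also supports array syntax such as errors{}.errorCode, which is not modelled.

```python
import json


def spath(raw: str, path: str):
    """Follow a dotted path such as "errors.errorCode" through a JSON document.
    Returns None when any step of the path is missing."""
    node = json.loads(raw)
    for key in path.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


# Hypothetical event: free text followed by an embedded JSON payload.
line = 'ts=12:00 payload={"errors": {"errorCode": "E500", "message": "db down"}}'
payload = line[line.index("{"):]  # cut the JSON out first (rex would do this in Splunk)
value = spath(payload, "errors.errorCode")
print(value)  # E500
```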

6. Keyword Search with Wildcards

For simple keyword searches when the exact phrasing is unknown, use wildcards:

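index=app_logs error*

This finds events containing any term that starts with "error", such as "error", "errors", or "errored". Keyword matching is case-insensitive, so "ERROR" matches as well. Avoid leading wildcards such as *error* where you can: they cannot use the index efficiently and make searches much slower.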
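The wildcard behaviour can be illustrated with Python's fnmatch module, under the simplifying assumption that terms are separated by whitespace. This is not how Splunk matches internally; it only shows which terms a trailing wildcard and a leading wildcard accept. fnmatch also treats ? and [] as wildcards, which Splunk does not, so the hypothetical wildcard_match helper should only be given patterns that use *.

```python
from fnmatch import fnmatchcase


def wildcard_match(pattern: str, line: str) -> bool:
    """Case-insensitive match of a *-wildcard pattern against each
    whitespace-separated term of a line."""
    pat = pattern.lower()
    return any(fnmatchcase(term.lower(), pat) for term in line.split())


samples = ["3 errors logged", "job errored out", "miserror happened", "all good"]
trailing = [s for s in samples if wildcard_match("error*", s)]
leading = [s for s in samples if wildcard_match("*error*", s)]
print(trailing)
print(leading)
```

The leading wildcard also matches "miserror", so such patterns are broader, as well as slower, than a trailing wildcard.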

7. Utilizing Splunk's Machine Learning Capabilities

Splunk's ML capabilities can detect patterns in unstructured logs that might be missed by traditional queries. For example, you can use anomaly detection algorithms to identify unusual spikes in error messages or unusual activity:

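index=app_logs "ERROR"
| timechart span=1h count AS error_count
| anomalydetection method=zscore error_count

(Note: The anomalydetection command is part of core Splunk and does not need a pretrained model; by default it returns only the results it considers anomalous. For models that you train and reuse, the Splunk Machine Learning Toolkit provides the fit and apply commands.)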
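The idea behind a z-score method can be sketched in Python: flag any hourly count that lies far from the mean, measured in standard deviations. This is a conceptual illustration only, not Splunk's anomalydetection implementation; zscore_anomalies, the threshold of 2.0, and the sample counts are assumptions chosen for the example.

```python
from statistics import mean, pstdev


def zscore_anomalies(counts, threshold=2.0):
    """Return the indexes of counts whose z-score (distance from the mean in
    population standard deviations) exceeds the threshold."""
    mu, sigma = mean(counts), pstdev(counts)
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [i for i, c in enumerate(counts) if abs(c - mu) / sigma > threshold]


# Hypothetical hourly error counts with one spike.
hourly_error_counts = [4, 5, 3, 6, 4, 5, 40, 4]
print(zscore_anomalies(hourly_error_counts))  # [6]
```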

Optimizing Unstructured Log Queries

For optimal performance and accurate results:

- Use efficient regular expressions: Avoid overly complex or ambiguous regex patterns, as they can significantly slow down query execution.

- Index your data appropriately: Send different types of unstructured logs to separate indexes and assign accurate sourcetypes, so that searches can be scoped with index= and sourcetype= filters.

- Use field extractions: Extracting fields from unstructured data simplifies subsequent querying and analysis.

- Filter your searches: Whenever possible, narrow down the scope of your queries using time ranges, specific indexes, or other filters.

- Utilize Splunk's built-in functions: Take advantage of Splunk's built-in functions for data manipulation and analysis to avoid unnecessary complexity in your queries.

- Monitor query performance: Use the Search Job Inspector to see how long each search takes and which commands consume the most time, then optimize the slowest queries.

Conclusion

Analyzing unstructured log records can be challenging, but Splunk provides a powerful set of tools to effectively navigate this type of data. By mastering regular expressions, utilizing field extractions, leveraging the transaction and spath commands, and incorporating machine learning, you can unlock valuable insights hidden within your unstructured logs and make informed decisions about your applications, systems, and security. Remember to consistently optimize your queries for efficiency and accuracy, ensuring you get the most out of your Splunk investment. Continuously refining your Splunk querying skills is essential for maximizing the value of your log data.
