Modern Processors Often Have ____ Levels Of Cache.

Onlines

Mar 29, 2025


Modern Processors Often Have Three Levels of Cache: Understanding CPU Cache Hierarchy

Modern processors boast impressive speeds, but their raw processing power would be significantly hampered without a sophisticated system of memory caching. Understanding cache memory is crucial to grasping the performance of modern computing. This article delves deep into the intricacies of CPU cache, specifically focusing on the prevalent three-level cache hierarchy found in most contemporary processors. We’ll explore the function of each level, their size differences, speed variations, and the impact this hierarchical structure has on overall system performance.

The Crucial Role of CPU Cache

Before diving into the specifics of the three levels, let’s establish the fundamental importance of CPU cache. A processor core can execute several instructions per nanosecond. Fetching data from main memory (RAM) typically takes on the order of 100 nanoseconds, which is hundreds of clock cycles. This speed discrepancy creates a bottleneck: without a cache, the processor would spend much of its time waiting for data to arrive from RAM. Cache memory acts as a high-speed buffer that keeps recently and frequently used data (and the data stored next to it) close to the processor. Programs tend to reuse the same data and to access neighboring addresses, so most requests can be served from the cache. This dramatically reduces the time it takes for the CPU to retrieve instructions and data.

Think of it like this: imagine a chef preparing a meal. The pantry represents the main memory (RAM), and the chef is the processor. Instead of constantly walking back and forth to the pantry for ingredients, the chef keeps frequently used ingredients (data) within easy reach on the countertop (cache). This significantly speeds up the cooking process (program execution).
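To make the idea concrete, here is a minimal Python sketch of why even a small cache helps. It models a tiny fully associative cache with least-recently-used (LRU) replacement and assumes 64-byte cache lines. Real L1 to L3 caches are set-associative and use hardware approximations of LRU. `hit_rate` is a hypothetical helper written for illustration only; it measures how often an access finds its line already cached.

```python
from collections import OrderedDict

LINE_SIZE = 64  # bytes per cache line (assumed; common on many modern CPUs)


def hit_rate(addresses, capacity_lines):
    """Simulate a tiny fully associative cache with LRU replacement.

    Each byte address is mapped to the cache line that contains it, so
    neighboring bytes share a line. Returns the fraction of accesses that hit.
    """
    cache = OrderedDict()  # line number -> None, ordered from LRU to MRU
    hits = 0
    for addr in addresses:
        line = addr // LINE_SIZE
        if line in cache:
            hits += 1
            cache.move_to_end(line)  # mark as most recently used
        else:
            cache[line] = None
            if len(cache) > capacity_lines:
                cache.popitem(last=False)  # evict the least recently used line
    return hits / len(addresses)


# Reading 4-byte integers one after another: 16 of them share each 64-byte line.
sequential = [i * 4 for i in range(4096)]
# Jumping a full line each time: every access touches a new line.
strided = [i * LINE_SIZE for i in range(4096)]

print(hit_rate(sequential, capacity_lines=8))  # 0.9375
print(hit_rate(strided, capacity_lines=8))     # 0.0
```

Sequential reads hit 15 times out of 16 because one miss brings in a whole 64-byte line. The strided pattern never reuses a line and gains nothing from the cache. This is why access patterns matter as much as cache size.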

The Three Levels: L1, L2, and L3 Cache

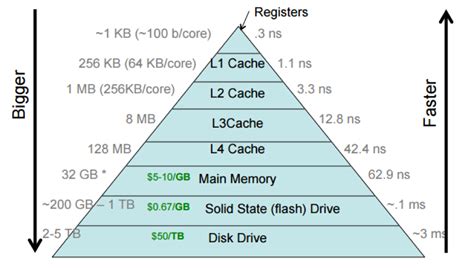

Most modern CPUs employ a three-level cache hierarchy: L1, L2, and L3 cache. Each level plays a distinct role, differing in size, speed, and access times. Let's explore each level individually:

L1 Cache: The Fastest, Smallest Cache

L1 cache is the fastest and smallest level of cache. It is built into each CPU core, right next to the execution units, which keeps access times extremely short. A typical figure is around 4 to 5 clock cycles, or roughly one nanosecond on a modern multi-gigahertz core. L1 is also small: commonly 32KB to 64KB each for data and for instructions per core, with some designs going larger. As a result, it can hold only the data and instructions the core has used most recently.
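One way to see how a small cache like L1 is organized is to split a memory address into a tag, a set index, and a byte offset. The sketch below assumes an illustrative L1 data cache of 32KB, 8-way set-associative, with 64-byte lines. That configuration is found on a number of x86 cores, but it is not universal. `split_address` is a hypothetical helper written for this example.

```python
def split_address(addr, cache_bytes=32 * 1024, ways=8, line_bytes=64):
    """Split an address into (tag, set index, byte offset) for a
    set-associative cache. All sizes are assumed to be powers of two."""
    num_sets = cache_bytes // (ways * line_bytes)  # 64 sets with the defaults
    offset_bits = line_bytes.bit_length() - 1      # log2(line_bytes)
    index_bits = num_sets.bit_length() - 1         # log2(num_sets)
    offset = addr & (line_bytes - 1)               # byte within the line
    index = (addr >> offset_bits) & (num_sets - 1)  # which set to search
    tag = addr >> (offset_bits + index_bits)       # compared against stored tags
    return tag, index, offset


print(split_address(0x12345))  # (18, 13, 5)
print(split_address(0x13345))  # (19, 13, 5): 4KB away, same set
```

With these assumptions the cache has 64 sets. The low 6 bits of an address select a byte within a line, and the next 6 bits select the set. Addresses that are a multiple of 4KB apart land in the same set and compete for its 8 slots.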

L1 cache is further divided into two parts:

- L1 Data Cache: Stores data being actively used by the processor.

- L1 Instruction Cache: Stores the instructions the processor is currently executing.

This separation lets the core fetch upcoming instructions and load or store data in the same clock cycle, without the two competing for a single cache. Nearly every instruction depends on L1, so its speed has a direct effect on how quickly almost any program runs.

L2 Cache: Bridging the Gap

L2 cache is larger than L1 cache but slower. In a three-level design it sits between L1 and L3. Its size varies by processor, typically from 256KB to a few megabytes per core, and recent desktop cores commonly have 1MB to 2MB. Access times are typically around 12 to 15 clock cycles, a few nanoseconds.

L2 cache is often referred to as a second-level buffer. When the processor needs data that isn't found in L1 cache (an L1 miss), it checks L2. If the data is present there, it is returned relatively quickly and also copied into L1. If not, the request moves on to L3. Only if L3 also misses does it go to the much slower main memory (RAM). Because L2 is several times larger than L1, it catches many of the accesses that miss in L1 and so reduces how often the slower levels must be consulted.
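The lookup order described above can be sketched in Python as a simplified model. It rests on several assumptions: 64-byte lines, each level represented as a set of line numbers, and round latency figures that are illustrative rather than measured. It also assumes a fill policy that copies the line into every level that missed. Real hierarchies may be inclusive, exclusive, or neither, so that choice is an assumption too. `load` and `LATENCY` are hypothetical names.

```python
LINE_SIZE = 64  # assumed cache-line size in bytes

# Assumed load-to-use latencies in cycles when the data is found at that
# level. Round illustrative numbers only; real values vary by processor.
LATENCY = {"L1": 4, "L2": 14, "L3": 45, "RAM": 250}


def load(addr, caches):
    """Look up `addr` in L1, then L2, then L3, then main memory.

    `caches` maps level name -> set of cached line numbers. Once the line is
    found, it is copied into every level that missed, so a repeat access
    hits in L1. Returns (level that supplied the data, latency in cycles).
    """
    line = addr // LINE_SIZE
    missed = []
    for level in ("L1", "L2", "L3"):
        if line in caches[level]:
            source = level
            break
        missed.append(level)
    else:  # no cache level had the line
        source = "RAM"
    for level in missed:  # fill the levels that missed
        caches[level].add(line)
    return source, LATENCY[source]


caches = {"L1": set(), "L2": set(), "L3": {0x40 // LINE_SIZE}}
print(load(0x40, caches))    # ('L3', 45): misses L1 and L2, hits L3
print(load(0x44, caches))    # ('L1', 4): same 64-byte line, now in L1
print(load(0x1000, caches))  # ('RAM', 250): not cached anywhere
```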

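L2 cache is also commonly paired with hardware prefetchers. These units watch the stream of memory accesses and detect regular patterns, such as a program stepping through an array. They then fetch the next lines before the program requests them.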
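A toy version of such a predictor is the stride prefetcher sketched below in Python. It is a hypothetical, single-stream model. Real hardware prefetchers typically track many streams at once, often indexed by the address of the load instruction, and they operate on whole cache lines; this sketch ignores both. Once it sees the same non-zero distance between consecutive accesses twice in a row, it predicts the next `degree` addresses along that stride.

```python
class StridePrefetcher:
    """Toy stride prefetcher: if the last two address deltas match,
    predict the next `degree` addresses along that stride."""

    def __init__(self, degree=2):
        self.degree = degree      # how many addresses ahead to predict
        self.last_addr = None
        self.last_stride = None

    def access(self, addr):
        """Record an access and return the addresses it would prefetch."""
        prefetches = []
        if self.last_addr is not None:
            stride = addr - self.last_addr
            # Predict only once the same non-zero stride is seen twice in a row.
            if stride != 0 and stride == self.last_stride:
                prefetches = [addr + stride * k for k in range(1, self.degree + 1)]
            self.last_stride = stride
        self.last_addr = addr
        return prefetches


pf = StridePrefetcher(degree=2)
for a in (1000, 1064, 1128, 1192):
    print(a, pf.access(a))
# 1000 []
# 1064 []
# 1128 [1192, 1256]
# 1192 [1256, 1320]
```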

L3 Cache: The Shared Resource

L3 cache represents the largest and slowest level of the cache hierarchy. L1 and L2 caches are typically private to individual cores. L3 cache, by contrast, is usually shared among all cores on the chip, or among a cluster of cores on some multi-chiplet designs. Because it is shared, data that one core has brought in can be found by another core without a trip to main memory. This benefits multi-threaded applications that work on the same data.

L3 cache sizes typically range from several megabytes to tens of megabytes, and some server processors go well beyond that. Access times are slower than L1 and L2, typically several tens of clock cycles (roughly 10 to 20 nanoseconds on desktop processors). That is still several times faster than main memory, where an access typically takes on the order of 100 nanoseconds. Its large capacity lets it hold data used across multiple cores, minimizing main memory accesses. Think of it as a common workspace where multiple chefs (cores) can share ingredients (data).

The inclusion of L3 cache significantly enhances the performance of multi-core processors, especially those running applications that demand frequent inter-core communication.

Cache Coherence and Consistency

Maintaining data consistency across multiple levels of cache and among multiple cores is crucial. Cache coherence protocols ensure that every core sees a consistent view of each memory location, even when several private caches hold copies of it. Most protocols do not push every write out to L2 and L3 immediately. Instead, they track the state of each cache line. Before a core modifies a line, any copies held in other cores' caches are invalidated, so a later read by another core fetches the up-to-date value. Hardware handles all of this transparently to software. Failure to maintain coherence would lead to unpredictable program behavior and potentially corrupt data.
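One widely taught coherence protocol is MESI, named after the four states a cache line can be in: Modified, Exclusive, Shared, and Invalid. Real processors use refinements of it, such as MESIF or MOESI, and implement them in hardware. The Python function below is therefore only a simplified sketch of the state changes one core's copy of a line goes through. `next_state` and the event names are hypothetical.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"   # only copy, dirty (differs from memory)
    EXCLUSIVE = "E"  # only copy, clean
    SHARED = "S"     # other caches may hold clean copies
    INVALID = "I"    # not present or no longer usable


def next_state(state, event, others_have_copy=False):
    """Simplified MESI transition for one cache line in one core's cache.

    event is one of:
      "local_read", "local_write"   - this core accesses the line
      "remote_read", "remote_write" - another core's access, seen by snooping
    """
    if event == "local_read":
        if state is State.INVALID:
            return State.SHARED if others_have_copy else State.EXCLUSIVE
        return state  # M, E and S can all satisfy a read locally
    if event == "local_write":
        # From S or I, other copies are invalidated first; from E the upgrade
        # needs no bus traffic. Either way this cache becomes the sole owner.
        return State.MODIFIED
    if event == "remote_read":
        # A dirty line is written back (or forwarded) and becomes shared.
        return State.INVALID if state is State.INVALID else State.SHARED
    if event == "remote_write":
        return State.INVALID  # another core takes ownership
    raise ValueError(f"unknown event: {event}")


s = next_state(State.INVALID, "local_read")  # no other copies -> EXCLUSIVE
s = next_state(s, "local_write")             # silent upgrade -> MODIFIED
s = next_state(s, "remote_read")             # written back -> SHARED
print(s)  # State.SHARED
```

The key rule is that a line can be written only in the Modified state. Reaching that state guarantees that no other core holds a valid copy.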

Impact of Cache Size and Speed on Performance

The size and speed of each cache level significantly influence overall system performance. A larger cache generally leads to fewer main memory accesses, and a faster cache returns data sooner. These two goals pull against each other, however: a larger cache takes longer to search. That is one reason processors use several levels instead of a single large cache. Increasing cache size and speed also costs power and chip area, so the balance between size, speed, and cost is a crucial consideration in processor design.
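The trade-off can be quantified with the average memory access time (AMAT). For each level, AMAT = hit time + miss rate × (AMAT of the next level), with main memory as the last level. The Python sketch below applies that formula from the outermost level inward. Each miss rate is local, meaning it counts only the accesses that reach that level. The latencies and miss rates in the example are assumed round numbers, not measurements of any particular processor, and `amat` is a hypothetical helper.

```python
def amat(levels, memory_latency):
    """Average memory access time for a multi-level cache hierarchy.

    levels: list of (hit_time, local_miss_rate) pairs from L1 outward, where
    the miss rate is the fraction of accesses reaching that level that miss.
    Applies AMAT = hit_time + miss_rate * (AMAT of the next level), starting
    from main memory and working inward.
    """
    cost = memory_latency
    for hit_time, miss_rate in reversed(levels):
        cost = hit_time + miss_rate * cost
    return cost


# Assumed figures: (hit time in cycles, local miss rate) for L1, L2, L3.
hierarchy = [(4, 0.10), (14, 0.40), (45, 0.50)]
print(round(amat(hierarchy, memory_latency=250), 2))  # 12.2
```

With these assumed figures, the average access costs about 12 cycles even though a trip to memory costs 250. Only 2% of accesses (10% × 40% × 50%) go all the way to RAM.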

Processor manufacturers continually work to improve cache performance through architectural innovations. These include better cache controllers, improved cache line management and replacement policies, and more accurate prediction of data access patterns.

Beyond Three Levels: Specialized Caches

The three-level hierarchy (L1, L2, L3) holds most instructions and data in modern processors. Processors also contain smaller specialized caches for specific purposes. The most important of these are:

- Translation Lookaside Buffer (TLB): A cache that stores page table entries to speed up virtual-to-physical address translation.

- Instruction TLB: Specifically caches translations for instructions.

- Data TLB: Specifically caches translations for data.

These specialized caches work alongside the main L1, L2, and L3 caches to optimize overall system performance.
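A TLB works much like the data caches above, except that it is keyed by virtual page number and returns a physical frame number. The Python sketch below assumes 4KB pages. It uses a plain dictionary as a stand-in for the in-memory page tables that hardware would walk on a miss. The `TLB` class and its sizes are hypothetical, and the sketch ignores details such as large pages and multi-level TLBs.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed 4KB pages


class TLB:
    """Toy fully associative TLB with LRU replacement.

    page_table is a dict {virtual page number: physical frame number} that
    stands in for the page tables a real hardware page walker would read.
    """

    def __init__(self, page_table, entries=4):
        self.page_table = page_table
        self.entries = entries
        self.cache = OrderedDict()  # vpn -> pfn, least recently used first
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr):
        """Translate a virtual address to a physical address."""
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn in self.cache:
            self.hits += 1
            self.cache.move_to_end(vpn)  # mark as most recently used
        else:
            self.misses += 1
            self.cache[vpn] = self.page_table[vpn]  # slow path: "page walk"
            if len(self.cache) > self.entries:
                self.cache.popitem(last=False)  # evict the LRU translation
        return self.cache[vpn] * PAGE_SIZE + offset


tlb = TLB({0: 7, 1: 3})
print(hex(tlb.translate(0x0123)))  # 0x7123 (miss, then cached)
print(hex(tlb.translate(0x0456)))  # 0x7456 (hit: same page)
print(hex(tlb.translate(0x1008)))  # 0x3008 (miss: new page)
print(tlb.hits, tlb.misses)        # 1 2
```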

The Future of CPU Cache

The quest for faster and more efficient computing continues to drive innovation in CPU cache design. Future developments are likely to include:

- Increased Cache Sizes: As chip manufacturing technology advances, larger caches will become feasible, potentially blurring the lines between cache levels.

- Advanced Cache Management Algorithms: More sophisticated algorithms will improve prediction accuracy, leading to better cache utilization and improved performance.

- Integration with Other Memory Technologies: Cache architectures might integrate more closely with other memory technologies, such as high-bandwidth memory (HBM), to further accelerate data access.

- Hardware-Assisted Cache Prefetching: More sophisticated hardware prefetching techniques will allow the CPU to anticipate data needs more effectively.

These advancements aim to maintain the performance gains that cache memory provides, keeping pace with the ever-increasing demands of modern computing applications.

Conclusion: Understanding the Foundation of Performance

Understanding the three levels of cache memory – L1, L2, and L3 – is crucial for comprehending the performance characteristics of modern processors. The hierarchical structure of these caches, their varying sizes and speeds, and the intricate mechanisms for maintaining cache coherence are fundamental aspects of computer architecture. As processor design continues to evolve, the role of cache memory will only grow in importance, continuing to be a key factor in delivering the high-speed computing power we rely on today. The ongoing innovations in cache architecture ensure that processors can continue to meet the ever-increasing demands of modern applications and software.
